Using This Number, Predict The Experimental Yield

Holbox

Mar 24, 2025 · 6 min read

Using This Number: Predicting Experimental Yield – A Deep Dive into Data Analysis and Optimization

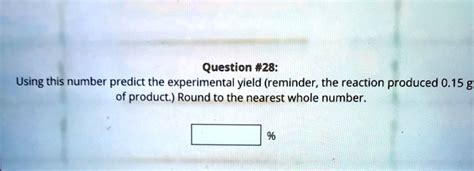

Predicting experimental yield is a cornerstone of scientific research and industrial processes. Whether you're a chemist synthesizing a new compound, a biologist cultivating cells, or an engineer optimizing a manufacturing process, accurate yield predictions improve efficiency, reduce cost, and make outcomes more reliable. This article surveys the main approaches to predicting experimental yield, focusing on data analysis and statistical modeling. We'll explore various techniques, highlight their strengths and weaknesses, and discuss how to make effective use of "this number" (whatever your relevant data point might be) to improve your predictions. The term "this number" is intentionally broad, covering the diverse range of input variables used in yield prediction.

Understanding the Concept of Experimental Yield

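Before we dive into predictive methods, let's clarify what we mean by "experimental yield." This is the amount of product actually obtained from an experimental process. It is usually reported as a percent yield: the actual yield divided by the theoretical yield (the maximum amount the process could produce, e.g., the amount the limiting reagent could form in a chemical reaction), multiplied by 100. Several factors influence experimental yield, including: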

- Reaction Conditions: Temperature, pressure, concentration of reactants, reaction time, and the presence of catalysts or inhibitors all significantly affect the outcome.

- Equipment and Methodology: The type of equipment used, the precision of measurements, and the adherence to established protocols all play a role.

- Raw Material Purity: Impurities in starting materials can hinder the reaction and reduce yield.

- Stochasticity: Even under controlled conditions, inherent randomness can introduce variations in the experimental yield.

Understanding these factors is vital for developing effective predictive models.
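The percent-yield definition above can be computed directly. The sketch below assumes a chemical reaction with a single limiting reagent of known amount and a known stoichiometric ratio; the function names and the example quantities (0.050 mol, 1:1 stoichiometry, a product molar mass of 180.16 g/mol, 7.2 g recovered) are hypothetical and only illustrate the arithmetic.

```python
def theoretical_yield_g(moles_limiting, product_per_reactant, molar_mass_product):
    """Maximum product mass (g) the limiting reagent can form.

    moles_limiting: moles of the limiting reagent
    product_per_reactant: moles of product per mole of limiting reagent (stoichiometry)
    molar_mass_product: molar mass of the product in g/mol
    """
    return moles_limiting * product_per_reactant * molar_mass_product


def percent_yield(actual_g, theoretical_g):
    """Actual yield expressed as a percentage of the theoretical yield."""
    if theoretical_g <= 0:
        raise ValueError("theoretical yield must be positive")
    return 100.0 * actual_g / theoretical_g


# Hypothetical run: 0.050 mol limiting reagent, 1:1 stoichiometry, product M = 180.16 g/mol
theoretical = theoretical_yield_g(0.050, 1.0, 180.16)  # about 9.01 g
print(f"{percent_yield(7.2, theoretical):.1f} %")  # prints "79.9 %"
```

A percent yield is the usual target variable for the models discussed below, because it normalizes runs performed at different scales.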

Leveraging Data: The Foundation of Yield Prediction

The most reliable way to predict experimental yield is to build on existing data, such as past experimental runs, pilot-scale trials, or simulations. The accuracy of a prediction depends directly on the quality and quantity of that data. High-quality data is characterized by:

- Accuracy: Precise and reliable measurements are essential.

- Completeness: All relevant parameters should be recorded.

- Consistency: Data should be collected using consistent methods and protocols.

- Relevance: The data should accurately reflect the conditions and variables affecting yield.

Insufficient or low-quality data will lead to inaccurate or unreliable predictions.
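Some of these quality criteria can be checked automatically before any modeling starts. The following is a minimal sketch, assuming each run is stored as a Python dictionary; the field names, the run records, and the 0-100 % plausibility range are illustrative assumptions, not a standard schema.

```python
# Hypothetical field names; replace with the parameters your own runs record.
REQUIRED_FIELDS = ("temperature_C", "time_h", "catalyst_mol_pct", "yield_pct")


def audit_runs(runs, required=REQUIRED_FIELDS):
    """Flag runs with missing fields (completeness) or implausible yields (accuracy)."""
    incomplete = {}
    out_of_range = []
    for index, run in enumerate(runs):
        run_id = run.get("run_id", index)
        # Completeness: every required parameter should have a recorded value
        missing = [field for field in required if run.get(field) is None]
        if missing:
            incomplete[run_id] = missing
        # Accuracy: a percent yield outside 0-100 usually points to a measurement
        # problem (e.g. wet or impure product) and deserves a second look
        value = run.get("yield_pct")
        if value is not None and not 0.0 <= value <= 100.0:
            out_of_range.append(run_id)
    return incomplete, out_of_range


runs = [
    {"run_id": "R1", "temperature_C": 60, "time_h": 2.0, "catalyst_mol_pct": 5, "yield_pct": 72.5},
    {"run_id": "R2", "temperature_C": 70, "time_h": None, "catalyst_mol_pct": 5, "yield_pct": 104.0},
]
print(audit_runs(runs))  # ({'R2': ['time_h']}, ['R2'])
```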

Statistical Modeling Techniques for Yield Prediction

Once you have a robust dataset, several statistical techniques can be employed to develop predictive models. These techniques vary in complexity and applicability, depending on the characteristics of your data.

1. Linear Regression

Linear regression is a simple yet powerful technique, suitable when the relationship between your independent variables ("this number" and other influencing factors) and the dependent variable (yield) is approximately linear. It fits a straight line through the data points (a hyperplane when there are several predictors), choosing the coefficients that minimize the sum of squared differences between observed and predicted yields. With a single predictor (n = 1) this is simple linear regression; the general form is:

- Yield = β₀ + β₁X₁ + β₂X₂ + ... + βₙXₙ + ε

Where:

- Yield is the observed experimental yield; the predicted yield is the same expression without ε

- β₀ is the intercept

- β₁, β₂, ..., βₙ are the regression coefficients representing the effect of each independent variable (X₁, X₂, ..., Xₙ)

- ε is the error term

Linear regression provides a straightforward equation to predict yield based on the input variables. However, it assumes a linear relationship, which might not always hold true in real-world scenarios.
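With a single predictor, the least-squares estimates for the equation above have a closed form: the slope β₁ is the covariance of X₁ and yield divided by the variance of X₁, and the intercept β₀ makes the line pass through the point of means. A minimal NumPy sketch follows; the catalyst-loading data are hypothetical.

```python
import numpy as np


def fit_simple_linear(x, y):
    """Ordinary least-squares estimates (beta0, beta1) for Yield = beta0 + beta1*X + error."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    x_mean, y_mean = x.mean(), y.mean()
    # Slope: covariance of X and yield divided by the variance of X
    beta1 = np.sum((x - x_mean) * (y - y_mean)) / np.sum((x - x_mean) ** 2)
    # Intercept: the fitted line passes through the point of means
    beta0 = y_mean - beta1 * x_mean
    return float(beta0), float(beta1)


# Hypothetical data: catalyst loading (mol %) vs. percent yield
loading = [1.0, 2.0, 3.0, 4.0, 5.0]
yield_pct = [52.0, 58.0, 63.0, 70.0, 75.0]
b0, b1 = fit_simple_linear(loading, yield_pct)
print(f"Yield = {b0:.2f} + {b1:.2f} * loading")  # prints "Yield = 46.20 + 5.80 * loading"
```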

2. Multiple Linear Regression

Multiple linear regression is the case n > 1 of the equation above: several independent variables are modeled simultaneously. It's particularly useful when several factors influence the yield. Each coefficient βᵢ is interpreted as the expected change in yield for a one-unit increase in Xᵢ, holding the other variables constant.
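With several predictors, the coefficients are usually found by solving a least-squares problem on a design matrix whose first column is all ones (for β₀). The sketch below uses `numpy.linalg.lstsq`; the temperature/time runs are hypothetical, noise-free toy data generated from known coefficients so that the recovered values can be checked. It also shows the "holding others constant" interpretation numerically.

```python
import numpy as np


def fit_multiple_linear(X, y):
    """Least-squares estimates [beta0, beta1, ..., betan] for Yield = beta0 + sum(beta_i * X_i) + error."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    # Prepend a column of ones so the first coefficient is the intercept beta0
    design = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    return beta


def predict(beta, X):
    """Predicted yield (no error term) for one or more runs."""
    X = np.atleast_2d(np.asarray(X, dtype=float))
    return beta[0] + X @ beta[1:]


# Hypothetical runs: columns are temperature (deg C) and reaction time (h)
X = [[60, 1.0], [70, 2.0], [80, 1.0], [90, 3.0], [65, 2.5]]
y = [10 + 0.5 * t + 3.0 * h for t, h in X]  # noise-free toy data with known coefficients
beta = fit_multiple_linear(X, y)
# Raising temperature by 1 deg C at fixed reaction time changes the prediction by beta1
effect = predict(beta, [[71, 2.0]])[0] - predict(beta, [[70, 2.0]])[0]
print(np.round(beta, 3), round(effect, 3))
```

With real, noisy data the recovered coefficients will only approximate the underlying effects, and strongly correlated predictors make individual coefficients unstable.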

3. Polynomial Regression

When the relationship between the independent variables and yield isn't linear (for example, when yield peaks at an intermediate temperature), polynomial regression can be used. It fits a curve (a polynomial function) to the data while remaining linear in its coefficients, so it can be estimated with the same least-squares methods as linear regression. Higher-order polynomials can capture more intricate patterns but increase the risk of overfitting.
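A quadratic fit is often enough to locate an optimum. The sketch below fits a degree-2 polynomial with `numpy.polyfit` (which returns coefficients highest power first) and reads the estimated optimum temperature off the vertex of the parabola. The temperature series is hypothetical, noise-free toy data; with real data, the degree should be chosen by validation rather than by how well the curve matches the training points.

```python
import numpy as np

# Hypothetical toy data: yield peaks at an intermediate temperature
temperature = np.array([40, 50, 60, 70, 80, 90, 100], dtype=float)
yield_pct = -0.02 * (temperature - 70) ** 2 + 80  # noise-free quadratic for illustration

# Fit Yield = c2*T^2 + c1*T + c0; np.polyfit returns the highest power first
c2, c1, c0 = np.polyfit(temperature, yield_pct, deg=2)
predicted = np.polyval([c2, c1, c0], temperature)

# For a downward-opening parabola (c2 < 0) the maximum sits at the vertex -c1 / (2*c2)
t_opt = -c1 / (2 * c2)
print(f"estimated optimum temperature: {t_opt:.1f} deg C")  # prints 70.0
```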

4. Non-Linear Regression

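For even more complex relationships that cannot be adequately captured by polynomial regression, non-linear regression techniques are employed. These methods fit models that are non-linear in their parameters (for example, a saturation curve in which yield approaches a maximum as reaction time increases). They offer greater flexibility but require iterative optimization algorithms, such as Gauss-Newton or Levenberg-Marquardt, and reasonable starting values for the parameters.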
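As an illustration, the sketch below fits a hypothetical saturation model, Yield = y_max · (1 − exp(−k·t)), with a plain Gauss-Newton iteration plus step halving, written in NumPy only. The model form, the parameter names `y_max` and `k`, and the noise-free toy data are assumptions chosen to show the mechanics; in practice a maintained routine such as SciPy's `scipy.optimize.curve_fit` would normally be used instead.

```python
import numpy as np


def saturation_model(t, y_max, k):
    """Hypothetical model: yield rises toward y_max with rate constant k (1/h)."""
    return y_max * (1.0 - np.exp(-k * t))


def fit_saturation(t, y, y_max0, k0, max_iter=200):
    """Gauss-Newton least-squares fit of (y_max, k), with step halving for stability."""
    t = np.asarray(t, dtype=float)
    y = np.asarray(y, dtype=float)
    params = np.array([y_max0, k0], dtype=float)

    def sse(p):
        return float(np.sum((y - saturation_model(t, *p)) ** 2))

    current = sse(params)
    for _ in range(max_iter):
        y_max, k = params
        decay = np.exp(-k * t)
        residual = y - y_max * (1.0 - decay)
        # Jacobian of the model with respect to (y_max, k)
        jac = np.column_stack([1.0 - decay, y_max * t * decay])
        # Gauss-Newton step: linear least-squares solution of jac @ step = residual
        step, *_ = np.linalg.lstsq(jac, residual, rcond=None)
        # Halve the step until the sum of squared errors decreases
        scale = 1.0
        while scale > 1e-10:
            candidate = params + scale * step
            candidate_sse = sse(candidate)
            if candidate_sse < current:
                break
            scale *= 0.5
        else:
            break  # no improving step: treat as converged
        params, current = candidate, candidate_sse
        if np.max(np.abs(scale * step)) < 1e-12:
            break
    return params


# Noise-free toy data generated from y_max = 85 %, k = 0.3 1/h
hours = np.linspace(0.5, 10.0, 20)
observed = saturation_model(hours, 85.0, 0.3)
y_max_hat, k_hat = fit_saturation(hours, observed, y_max0=observed.max(), k0=0.5)
print(f"y_max = {y_max_hat:.2f} %, k = {k_hat:.3f} 1/h")
```

The starting values matter: here the largest observed yield is used as the initial guess for `y_max`, which is a common heuristic for saturating curves.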

5. Machine Learning Techniques

Machine learning algorithms, such as support vector machines (SVMs), decision trees, random forests, and neural networks, are increasingly used for yield prediction. These methods can handle large datasets, complex relationships, and many input variables. They can identify non-linear patterns without a pre-specified equation and can outperform traditional statistical models on noisy or intricate data, provided there are enough experimental runs to train them; with small datasets they are prone to overfitting.
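To make one of these methods concrete, here is a minimal regression-tree sketch in NumPy: each split is chosen greedily to minimize the summed squared error of the two child nodes, and each leaf predicts the mean yield of its runs. The function names, the depth limits, and the step-shaped temperature data are hypothetical. For real work, a maintained implementation such as scikit-learn's `DecisionTreeRegressor` would normally be preferred; a random forest (`RandomForestRegressor`) averages many such trees fit on resampled data.

```python
import numpy as np


def build_tree(X, y, depth=0, max_depth=3, min_leaf=2):
    """Greedy regression tree: each split minimizes the summed squared error of the two children."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    # Stop and predict the mean yield when the node is deep, small, or already pure
    if depth >= max_depth or len(y) < 2 * min_leaf or np.all(y == y[0]):
        return {"value": float(y.mean())}
    best = None
    for j in range(X.shape[1]):
        levels = np.unique(X[:, j])
        # Candidate thresholds lie halfway between consecutive distinct values
        for lo, hi in zip(levels[:-1], levels[1:]):
            threshold = (lo + hi) / 2.0
            left = X[:, j] <= threshold
            n_left = int(left.sum())
            if n_left < min_leaf or len(y) - n_left < min_leaf:
                continue
            sse = ((y[left] - y[left].mean()) ** 2).sum()
            sse += ((y[~left] - y[~left].mean()) ** 2).sum()
            if best is None or sse < best[0]:
                best = (sse, j, threshold, left)
    if best is None:
        return {"value": float(y.mean())}
    _, j, threshold, left = best
    return {
        "feature": j,
        "threshold": float(threshold),
        "left": build_tree(X[left], y[left], depth + 1, max_depth, min_leaf),
        "right": build_tree(X[~left], y[~left], depth + 1, max_depth, min_leaf),
    }


def predict_tree(node, x):
    """Follow the splits for one run x until a leaf is reached."""
    while "value" not in node:
        node = node["left"] if x[node["feature"]] <= node["threshold"] else node["right"]
    return node["value"]


# Hypothetical runs: yield jumps once temperature exceeds about 55 deg C (a step, not a line)
temps = [[20], [30], [40], [50], [60], [70], [80], [90], [100]]
yields = [40, 40, 40, 40, 75, 75, 75, 75, 75]
tree = build_tree(temps, yields, max_depth=2)
print(tree["threshold"], predict_tree(tree, [35]), predict_tree(tree, [85]))  # 55.0 40.0 75.0
```

A straight line would smear this jump across the whole temperature range, whereas the tree places a single split at the boundary.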

Feature Engineering and Variable Selection

The success of your predictive model heavily relies on feature engineering and variable selection. This involves:

- Identifying Relevant Variables: Carefully consider which factors are likely to significantly influence yield.

- Transforming Variables: Variables might need transformation (e.g., logarithmic, square root) to improve model performance.

- Handling Missing Data: Develop strategies for handling missing data points, such as imputation or removal.

- Feature Scaling: Scaling variables to a similar range can improve the efficiency of some algorithms.

- Feature Selection: Techniques like stepwise regression or regularization can help identify the most important predictors and remove less influential variables, improving model interpretability and preventing overfitting.
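Several of the steps above (transformation, missing-data handling, and scaling) can be chained in a few lines. The sketch below log-transforms selected columns, replaces missing values with the column mean (one simple imputation strategy among many), and standardizes each column to mean 0 and standard deviation 1. The function name, the column layout, and the data are hypothetical; `NaN` is assumed to mark a missing measurement.

```python
import numpy as np


def prepare_features(X, log_columns=()):
    """Log-transform selected columns, mean-impute missing values, then standardize.

    Returns the transformed matrix plus the column means and standard deviations,
    so the same scaling can be reapplied to new runs.
    """
    X = np.array(X, dtype=float)  # copy; NaN marks a missing measurement
    for j in log_columns:
        # A log transform suits strictly positive, right-skewed variables such as concentrations
        X[:, j] = np.log(X[:, j])
    # Mean imputation: replace each NaN with the mean of the observed values in its column
    col_means = np.nanmean(X, axis=0)
    rows, cols = np.where(np.isnan(X))
    X[rows, cols] = col_means[cols]
    # Standardize each column to mean 0 and standard deviation 1
    mu = X.mean(axis=0)
    sigma = X.std(axis=0)
    sigma[sigma == 0] = 1.0  # leave constant columns centered but unscaled
    return (X - mu) / sigma, mu, sigma


# Hypothetical runs: temperature (deg C), catalyst concentration (mM); one value missing
raw = [[60, 0.5], [70, np.nan], [80, 5.0], [90, 50.0]]
Z, mu, sigma = prepare_features(raw, log_columns=[1])
```

When a model will be evaluated on held-out data, the imputation means and scaling parameters should be computed on the training runs only and then reused on the test runs, so that no information leaks from the test set.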

Model Evaluation and Validation

Once you've built a predictive model, it's crucial to evaluate its performance and ensure its generalizability. This involves:

- Training and Testing Sets: Divide your data into training and testing sets. The training set is used to build the model, while the testing set is used to evaluate its performance on unseen data.

- Performance Metrics: Use appropriate metrics to assess the model's accuracy, such as R-squared, Mean Squared Error (MSE), Root Mean Squared Error (RMSE), and Mean Absolute Error (MAE).

- Cross-Validation: Techniques like k-fold cross-validation provide a more robust estimate of model performance by training and testing on different subsets of the data.

- Overfitting vs. Underfitting: Be aware of the trade-off between overfitting (the model performs well on the training data but poorly on new data) and underfitting (the model is too simple to capture the underlying patterns).
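The metrics and the k-fold procedure described above are short enough to write out explicitly. The sketch below computes R-squared, MSE, RMSE, and MAE, generates k-fold train/test splits, and uses them to estimate the held-out RMSE of an ordinary least-squares model. The function names are hypothetical; libraries such as scikit-learn provide maintained equivalents (for example `sklearn.model_selection.KFold`).

```python
import numpy as np


def regression_metrics(y_true, y_pred):
    """R-squared, MSE, RMSE and MAE for a set of yield predictions."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_true - y_pred
    mse = float(np.mean(err ** 2))
    ss_res = float(np.sum(err ** 2))
    ss_tot = float(np.sum((y_true - y_true.mean()) ** 2))  # must be > 0
    return {
        "R2": 1.0 - ss_res / ss_tot,
        "MSE": mse,
        "RMSE": mse ** 0.5,
        "MAE": float(np.mean(np.abs(err))),
    }


def k_fold_indices(n, k, seed=0):
    """Yield (train, test) index arrays; each run lands in exactly one test fold."""
    order = np.random.default_rng(seed).permutation(n)
    folds = np.array_split(order, k)
    for i in range(k):
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train, folds[i]


def cross_validated_rmse(X, y, k=5, seed=0):
    """Average held-out RMSE of an ordinary least-squares model with an intercept."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    design = np.column_stack([np.ones(len(y)), X])
    scores = []
    for train, test in k_fold_indices(len(y), k, seed):
        # Fit on the training folds only, then score on the held-out fold
        beta, *_ = np.linalg.lstsq(design[train], y[train], rcond=None)
        scores.append(regression_metrics(y[test], design[test] @ beta)["RMSE"])
    return float(np.mean(scores))


print(regression_metrics([1, 2, 3, 4], [1, 2, 3, 5]))
# {'R2': 0.8, 'MSE': 0.25, 'RMSE': 0.5, 'MAE': 0.25}
```

Comparing the training error with the cross-validated error is a practical check for the overfitting/underfitting trade-off: a large gap between the two suggests overfitting, while both being high suggests underfitting.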

Iterative Improvement and Optimization

Predictive modeling is an iterative process. Continuously monitor your model's performance, refine your data collection methods, explore new features, and experiment with different algorithms to optimize your predictions. Regular updates and adjustments are essential to maintain accuracy and relevance as new data becomes available or the experimental process evolves.

Beyond Statistical Models: Incorporating Process Knowledge

While statistical models are powerful tools, they shouldn't replace sound experimental design and process knowledge. Incorporate your understanding of the underlying chemical, biological, or engineering principles to guide your model development and interpretation. This expert knowledge can improve feature selection, identify potential biases, and provide insights into model limitations.

Conclusion: The Power of Data-Driven Yield Prediction

Predicting experimental yield accurately is crucial for efficient research and industrial processes. By carefully collecting and analyzing data, employing appropriate statistical modeling techniques, and integrating process knowledge, you can significantly improve your ability to predict yield and optimize experimental outcomes. Remember that "this number" – your key data point – is only one piece of the puzzle. Accurate, reliable yield prediction requires a holistic approach: consider multiple factors, use robust statistical methods, and keep refining the model as new data and process understanding become available.