When SVMs Are Less Effective

Holbox

Mar 14, 2025 · 6 min read


Support Vector Machines (SVMs): When They Fall Short

Support Vector Machines (SVMs) are versatile supervised learning algorithms that perform well on many classification and regression tasks. However, they are not the best choice in every setting. This article covers the scenarios where SVMs are less effective, explains the underlying reasons, and offers strategies to mitigate these limitations.

1. High Dimensionality and the Curse of Dimensionality

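SVMs often cope with high-dimensional data better than many other methods, but they can still struggle when the number of features is very large relative to the number of samples, or when many features are irrelevant. This is largely due to the curse of dimensionality: the volume of the feature space grows exponentially with the number of features, so a fixed number of samples covers it ever more thinly. This leads to several issues: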

- Increased computational complexity: Every dot product or kernel evaluation costs time proportional to the number of features, so training and prediction slow down as features are added. With a very large number of features, training can become impractical.

- Overfitting: With numerous features and comparatively few samples, the SVM may fit the noise and peculiarities of the training set rather than the underlying patterns. This results in poor generalization to unseen data.

- Data sparsity: In high-dimensional spaces, data points tend to be sparsely distributed. This makes it difficult to find a separating hyperplane that generalizes, because the support vectors that define the margin come from a thin sample of the space.
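The sparsity argument can be made concrete with a small counting sketch. It assumes each feature is split into 10 bins (an arbitrary choice made for illustration) and reports the largest fraction of the resulting grid cells that a fixed number of samples could possibly occupy. The function name and the sample count are hypothetical.

```python
def max_occupied_fraction(n_samples: int, n_features: int, bins_per_axis: int = 10) -> float:
    """Upper bound on the fraction of grid cells that n_samples points can occupy."""
    n_cells = bins_per_axis ** n_features  # grows exponentially with n_features
    return min(1.0, n_samples / n_cells)


# With 1000 samples, coverage collapses as features are added.
for d in (1, 2, 3, 6, 10):
    fraction = max_occupied_fraction(1000, d)
    print(f"{d:>2} features: at most {fraction:.2e} of cells occupied")
```

Even in the best case, 1000 samples cannot touch more than a tiny share of the cells once there are more than a handful of features, which is why the margin ends up being estimated from very little local evidence.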

Mitigation Strategies:

- Feature selection/extraction: Feature selection algorithms keep only the most relevant features, while techniques like Principal Component Analysis (PCA) create new, lower-dimensional representations that capture most of the variance. Both can significantly reduce the dimensionality of the data.

- Regularization: SVMs already include a regularization term, controlled by the parameter C. A smaller C tolerates more margin violations and yields a simpler, wider-margin model that is less prone to overfitting. For linear SVMs, an L1 penalty can additionally drive some weights to zero, which acts as built-in feature selection.

- Kernel methods: Kernels implicitly map the data to a higher-dimensional feature space, and the kernel trick computes inner products in that space directly from the original inputs. This avoids the computational burden of constructing the mapped features explicitly, although the kernel still has to be chosen carefully to suit the data.
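To illustrate what "implicitly" means for kernels, the following sketch compares a homogeneous degree-2 polynomial kernel with the explicit feature map it corresponds to. The kernel choice and the two example vectors are assumptions made for illustration; the point is only that both routes give the same inner product.

```python
from itertools import product


def poly2_kernel(x, z):
    """Homogeneous degree-2 polynomial kernel: (x . z) ** 2."""
    return sum(a * b for a, b in zip(x, z)) ** 2


def poly2_feature_map(x):
    """Explicit feature map for the same kernel: all ordered products x_i * x_j."""
    return [a * b for a, b in product(x, repeat=2)]


x = [1.0, 2.0, 3.0]
z = [0.5, -1.0, 2.0]

# Implicit route: one dot product in the original 3 dimensions.
implicit = poly2_kernel(x, z)

# Explicit route: build 9-dimensional features, then take their dot product.
phi_x, phi_z = poly2_feature_map(x), poly2_feature_map(z)
explicit = sum(a * b for a, b in zip(phi_x, phi_z))

print(implicit, explicit, len(phi_x))
```

The explicit map grows quadratically with the number of input features here (and faster for higher degrees), while the kernel evaluation stays linear in the input dimension.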

2. Noisy Data and Outliers

SVMs are sensitive to noisy data and outliers. Because the decision boundary is determined by the support vectors alone, mislabeled or extreme points that fall near or beyond the margin can shift the hyperplane and produce a less accurate, less robust model. Outliers in particular can pull the decision boundary away from the true underlying data distribution.

Mitigation Strategies:

- Data cleaning: Before training the SVM, clean the dataset thoroughly. This involves handling missing values, removing duplicates, and addressing outliers. Visual tools such as box plots or scatter plots can help identify problematic data points, which can then be removed or corrected.

- Robust loss functions: The standard hinge loss grows without bound for badly misclassified points. Robust loss functions limit the penalty that any single point can contribute, which makes the model less susceptible to outliers.

- One-class SVM: If the dataset is heavily contaminated with noise or outliers, a one-class SVM can be used to model the normal data distribution and flag points that lie outside it as anomalies.
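The difference between the hinge loss and a robust alternative can be sketched in a few lines. The ramp loss (a hinge loss clipped at a fixed cap) is used here as one example of a robust loss; that choice, the cap value of 2.0, and the example margins are assumptions of this sketch rather than something the article prescribes.

```python
def hinge_loss(margin: float) -> float:
    """Standard SVM hinge loss; margin is y * f(x) for a label y in {-1, +1}."""
    return max(0.0, 1.0 - margin)


def ramp_loss(margin: float, cap: float = 2.0) -> float:
    """Hinge loss clipped at `cap`, so no single point contributes more than `cap`."""
    return min(cap, hinge_loss(margin))


# A correctly classified point, a borderline point, and a far-off outlier.
for margin in (2.0, 0.5, -10.0):
    print(f"margin {margin:>5}: hinge={hinge_loss(margin):>4}, ramp={ramp_loss(margin):>4}")
```

The trade-off is that clipping makes the objective non-convex, so training with such a loss is harder than solving the standard SVM problem.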

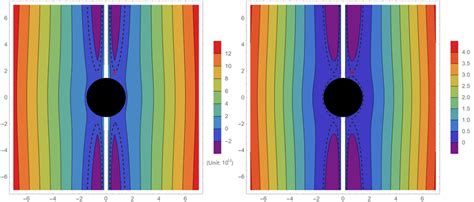

3. Non-Linearly Separable Data

While SVMs can handle non-linearly separable data through the use of kernel functions, their performance can still be limited if the data is highly complex or the chosen kernel is inappropriate. If the kernel does not adequately capture the non-linear relationships within the data, the SVM will struggle to create an effective decision boundary.
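As a small illustration of how kernel settings shape what the SVM can represent, the sketch below evaluates a Gaussian RBF kernel for the same pair of points with different widths. The parameter name `gamma` and the chosen values are assumptions for illustration.

```python
import math


def rbf_kernel(x, z, gamma):
    """Gaussian RBF kernel: exp(-gamma * ||x - z||^2)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)


a, b = [0.0, 0.0], [1.0, 1.0]  # squared distance between a and b is 2

# Small gamma: every pair looks similar. Large gamma: only near-identical points do.
for gamma in (0.01, 1.0, 100.0):
    print(f"gamma={gamma:>6}: k(a, b)={rbf_kernel(a, b, gamma):.3g}")
```

With a very small `gamma` the kernel barely distinguishes points and the model tends to underfit; with a very large `gamma` each point is similar only to itself and the model tends to memorize the training set.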

Mitigation Strategies:

- Kernel selection: Choosing the right kernel function is crucial for handling non-linearly separable data. Different kernel functions (e.g., Gaussian radial basis function (RBF), polynomial, sigmoid) have different properties and suit different data distributions. Experimentation and cross-validation are essential to find a suitable kernel and its hyperparameters for a specific dataset.

- Data transformation: Pre-processing the data with polynomial feature expansion or other non-linear transformations can make it more linearly separable in a higher-dimensional space.

- Ensemble methods: Combining SVMs with other algorithms, or using ensemble methods like bagging or boosting, can improve performance on complex non-linear datasets by combining the predictions of multiple models.
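The data transformation idea can be shown on the classic XOR-style pattern, which no straight line separates in the original two dimensions. The sketch below adds a single product feature and then applies a hand-picked linear rule in the expanded space; the points, weights, and helper names are all hypothetical.

```python
# Four points in an XOR-like layout: same-sign coordinates -> +1, mixed signs -> -1.
points = [(-1.0, -1.0), (-1.0, 1.0), (1.0, -1.0), (1.0, 1.0)]
labels = [1, -1, -1, 1]


def expand(x):
    """Degree-2 interaction expansion: (x1, x2) -> (x1, x2, x1 * x2)."""
    x1, x2 = x
    return (x1, x2, x1 * x2)


def linear_predict(w, b, features):
    """Sign of a linear decision function w . features + b."""
    score = sum(wi * fi for wi, fi in zip(w, features)) + b
    return 1 if score >= 0 else -1


# Hand-picked hyperplane in the expanded space: it only looks at x1 * x2.
w, b = (0.0, 0.0, 1.0), 0.0
predictions = [linear_predict(w, b, expand(p)) for p in points]
print(predictions == labels)
```

A polynomial kernel achieves the same effect without materializing the extra features, which matters once the expansion becomes large.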

4. Imbalanced Datasets

When the classes in a classification problem are imbalanced (i.e., one class has significantly more samples than the others), SVMs may favor the majority class, leading to poor performance on the minority class. The soft-margin objective sums the penalties for margin violations over all training samples, so the majority class contributes most of the total penalty and the decision boundary tends to shift toward the minority class.

Mitigation Strategies:

- Resampling techniques: Oversampling the minority class (e.g., with SMOTE, the Synthetic Minority Over-sampling Technique) or undersampling the majority class can help balance the dataset.

- Cost-sensitive learning: Assigning different misclassification costs to different classes during training can help the SVM prioritize the minority class. A higher cost for minority-class errors encourages the SVM to classify these instances correctly.

- Different evaluation metrics: Accuracy alone is misleading on imbalanced data. Metrics tailored to imbalanced datasets (e.g., precision, recall, F1-score, AUC-ROC) give a more faithful picture of the SVM's performance.
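Two of the points above can be sketched with plain Python. The first function derives per-class costs with the common "balanced" heuristic, n_samples / (n_classes * class_count), which is one reasonable way to set misclassification costs and is an assumption here. The second computes precision, recall, and F1 for a hypothetical classifier that always predicts the majority class.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class inversely to its frequency: n / (n_classes * count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


def precision_recall_f1(y_true, y_pred, positive=1):
    """Precision, recall and F1 for the positive class (0.0 when undefined)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


y_true = [0] * 95 + [1] * 5   # 95 majority samples, 5 minority samples
y_pred = [0] * 100            # a model that always predicts the majority class

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
weights = balanced_class_weights(y_true)
print(accuracy, precision_recall_f1(y_true, y_pred), weights)
```

The always-majority model looks strong on accuracy while finding no minority samples at all, and the balanced weights make each minority-class error far more costly than a majority-class error.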

5. Computational Cost for Large Datasets

Training SVMs on very large datasets is computationally expensive. For kernel SVMs, training time typically grows between quadratically and cubically with the number of samples, and the kernel matrix has one entry for every pair of samples. This can make SVMs impractical for applications dealing with massive datasets.
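A quick back-of-the-envelope calculation shows why the number of samples matters so much for kernel SVMs. The sketch assumes the full kernel (Gram) matrix is stored as 8-byte floats; practical solvers usually cache only part of it, so treat the figures as an upper bound on memory rather than a measurement.

```python
def gram_matrix_bytes(n_samples: int, bytes_per_entry: int = 8) -> int:
    """Memory for a dense n x n kernel matrix, one entry per pair of samples."""
    return n_samples * n_samples * bytes_per_entry


for n in (10_000, 100_000, 1_000_000):
    print(f"{n:>9,} samples -> {gram_matrix_bytes(n) / 1e9:,.1f} GB")
```

Multiplying the dataset size by 10 multiplies the matrix size by 100, which is the quadratic growth that the strategies below try to avoid.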

Mitigation Strategies:

- Stochastic gradient descent (SGD): Optimizing the SVM objective with SGD can significantly reduce the computational cost of training, which makes linear SVMs feasible for large datasets. SGD iteratively updates the model parameters based on single samples or small subsets of the data, rather than the entire dataset.

- Approximate algorithms: Approximate algorithms reduce training time at the cost of some accuracy. They aim to find a good-enough solution more quickly than exact solvers.

- Incremental/Distributed learning: For extremely large datasets that cannot be processed on a single machine, incremental learning processes the data in chunks, while distributed learning spreads the training process across multiple machines and reduces the computational burden on any individual machine.
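The SGD strategy can be sketched for a linear SVM as follows. This is a minimal Pegasos-style update on the L2-regularized hinge loss, written under several simplifying assumptions: no bias term, one sample per update, a fixed sample order instead of random sampling (to keep the run reproducible), and a tiny hand-made dataset. The function names and hyperparameter values are hypothetical.

```python
def train_linear_svm_sgd(samples, labels, lam=0.01, epochs=20):
    """Pegasos-style SGD on the L2-regularized hinge loss (no bias term)."""
    w = [0.0] * len(samples[0])
    t = 0
    for _ in range(epochs):
        # Real SGD draws samples at random; a fixed order keeps this sketch reproducible.
        for x, y in zip(samples, labels):
            t += 1
            eta = 1.0 / (lam * t)                      # decaying step size
            margin = y * sum(wi * xi for wi, xi in zip(w, x))
            shrink = 1.0 - eta * lam                   # step on the L2 penalty
            w = [shrink * wi for wi in w]
            if margin < 1.0:                           # hinge loss active for this sample
                w = [wi + eta * y * xi for wi, xi in zip(w, x)]
    return w


def predict(w, x):
    """Sign of the linear decision function w . x."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) >= 0 else -1


samples = [(2.0, 2.0), (-2.0, -2.0), (3.0, 2.0), (-3.0, -2.0), (2.0, 3.0), (-2.0, -3.0)]
labels = [1, -1, 1, -1, 1, -1]

w = train_linear_svm_sgd(samples, labels)
accuracy = sum(predict(w, x) == y for x, y in zip(samples, labels)) / len(samples)
print(w, accuracy)
```

Each update touches a single sample, so the cost per step does not depend on the dataset size, and the data can be streamed from disk instead of being held in memory.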

6. Difficulty in Interpreting Results

While SVMs are powerful predictors, interpreting their results can be challenging. The decision boundary obtained by SVMs, especially with complex kernel functions, is not always easily understandable or interpretable. This can make it difficult to gain insights into the relationships between the features and the outcome.

Mitigation Strategies:

- Feature importance analysis: For a linear SVM, the magnitude of each learned weight indicates how strongly the corresponding (scaled) feature influences the decision. For kernel SVMs, model-agnostic techniques such as permutation importance can estimate which features matter most. Both provide some insight into the model's behaviour.

- Visualization techniques: Visualizing the decision boundary (for lower-dimensional data) or using techniques like partial dependence plots can offer visual insight into the model's behaviour and decision-making process.

- Explainable AI (XAI) techniques: XAI techniques aim to make machine learning models more interpretable and remain an active area of research. Future advances in XAI may provide better ways to interpret SVM predictions.
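For the linear case, a simple form of feature importance analysis is to break a single prediction into per-feature contributions. The sketch below assumes an already-trained linear SVM on standardized features; the feature names, weights, bias, and input are invented for illustration, and the approach does not carry over directly to kernel SVMs.

```python
def feature_contributions(weights, x, feature_names):
    """Per-feature terms w_i * x_i of a linear decision function, largest first."""
    contributions = {name: w * xi for name, w, xi in zip(feature_names, weights, x)}
    return sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)


# Hypothetical weights of an already-trained linear SVM on standardized features.
feature_names = ["age", "income", "tenure"]
weights = [0.5, -2.0, 0.25]
bias = 0.5
x = [1.0, 0.5, -1.0]

ranked = feature_contributions(weights, x, feature_names)
score = sum(value for _, value in ranked) + bias  # decision value w . x + b

for name, value in ranked:
    print(f"{name:>7}: {value:+.2f}")
print(f"decision value: {score:+.2f}")
```

The sign of each contribution shows which class the feature pushes toward for this input, and the ranking shows which features dominate the decision.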

Conclusion

SVMs are a valuable tool in the machine learning arsenal, but their effectiveness depends on the nature of the data and the problem at hand. Understanding where SVMs tend to underperform, along with the mitigation strategies outlined above, is crucial for applying them successfully. Careful data pre-processing, parameter tuning, and kernel selection help maximize the benefits of SVMs and minimize their limitations. The best approach usually emerges from experimentation and validation on the specific dataset and problem.
