For Needs Met Tasks Some Duplicate Results Are Automatically Detected

Holbox

Mar 17, 2025 · 6 min read

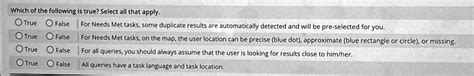

Duplicate content is a significant challenge in many applications, especially those dealing with large datasets or user-generated content. While perfectly acceptable in certain contexts, in others it can significantly harm the user experience, negatively impact search engine optimization (SEO), and even lead to data inconsistencies and inaccuracies. This article delves into the problem of duplicate results in "needs met" tasks, explaining why they occur, how they're detected, and strategies to mitigate or eliminate them.

Understanding "Needs Met" Tasks and Duplicate Results

In this article, "needs met" tasks are those handled by systems designed to identify and fulfill user requests or needs. Examples include:

- Search Engines: Identifying web pages most relevant to a user's query.

- Recommendation Systems: Suggesting products, movies, or other items based on user preferences and behavior.

- Question Answering Systems: Providing answers to user-posed questions.

- Customer Service Chatbots: Addressing user inquiries and resolving issues.

In these contexts, a "duplicate result" means the system returns multiple nearly identical or substantially similar responses to the same request. These duplicates might manifest in various forms:

- Identical text: The system returns precisely the same text multiple times.

- Near-duplicate text: The system returns responses that differ in minor ways (e.g., different word order, minor paraphrasing) but convey essentially the same information.

- Duplicate links or references: The system presents the same underlying resource multiple times, even if the displayed information differs slightly.

- Redundant information: The system returns multiple responses covering the same ground, potentially in different formats.

Why Duplicate Results Occur in "Needs Met" Tasks

Several factors contribute to the generation of duplicate results in needs met tasks:

1. Data Redundancy in the Underlying Dataset:

Many systems rely on extensive datasets. If a dataset contains redundant or duplicated information, the system is likely to return duplicate results. This is especially common when data is drawn from multiple, uncoordinated sources.

2. Inefficient Data Processing and Retrieval Algorithms:

Poorly designed algorithms might fail to adequately filter out duplicate or near-duplicate results. They may lack robust deduplication mechanisms or prioritize retrieval speed over result uniqueness.

3. Ambiguity in User Queries or Needs:

Ambiguous queries can lead to the system retrieving multiple seemingly relevant but ultimately redundant answers. For example, a broad search query might result in multiple similar articles covering the same topic from different perspectives.

4. Lack of Proper Ranking and Filtering Mechanisms:

Even if duplicates are initially retrieved, a robust ranking system should prioritize unique and higher-quality results, pushing duplicates lower in the results list or eliminating them altogether. Inefficient ranking mechanisms can allow duplicates to persist.

5. Data Updates and Synchronization Issues:

In dynamic systems, updating the underlying data can introduce duplicates if not handled carefully. Inconsistencies between data sources or delayed updates can lead to multiple versions of the same information being available.

Automatic Detection of Duplicate Results

Detecting duplicates is crucial for maintaining the quality and efficiency of "needs met" tasks. Several techniques are employed for automatic detection:

1. Exact Matching:

This is the simplest method, comparing results for identical strings or sequences of characters. It’s effective for detecting perfect duplicates but misses near-duplicates.
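As a rough illustration, exact matching can be done by hashing each result and keeping only the first occurrence. The sketch below is one possible approach, not a prescribed implementation; the function names and the whitespace and case normalization step are assumptions added for the example (strict exact matching would skip normalization).

```python
import hashlib


def normalize(text: str) -> str:
    # Assumption for this sketch: ignore case and collapse runs of whitespace.
    return " ".join(text.lower().split())


def drop_exact_duplicates(results):
    """Keep the first occurrence of each result, preserving order."""
    seen = set()
    unique = []
    for text in results:
        digest = hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(text)
    return unique
```

Storing digests instead of full strings keeps memory use predictable when results are long.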

2. Simhashing:

Simhashing is a locality-sensitive hashing technique that generates a short "fingerprint" for each piece of text. Similar texts have similar fingerprints, allowing efficient identification of near-duplicates.
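A minimal sketch of the idea, assuming whitespace tokenization, equal token weights, and a 64-bit fingerprint built from MD5 token hashes (all choices made for this example only): each token votes on every bit, and the sign of the vote total sets that bit. Two fingerprints are then compared by Hamming distance.

```python
import hashlib

BITS = 64  # fingerprint width chosen for this sketch


def simhash(text: str) -> int:
    """Return a 64-bit SimHash fingerprint of the text's tokens."""
    weights = [0] * BITS
    for token in text.lower().split():
        digest = hashlib.md5(token.encode("utf-8")).digest()
        token_hash = int.from_bytes(digest[:8], "big")
        for bit in range(BITS):
            # Each token votes +1 or -1 on every bit position.
            weights[bit] += 1 if (token_hash >> bit) & 1 else -1
    fingerprint = 0
    for bit, weight in enumerate(weights):
        if weight > 0:
            fingerprint |= 1 << bit
    return fingerprint


def hamming_distance(a: int, b: int) -> int:
    """Number of bit positions in which two fingerprints differ."""
    return bin(a ^ b).count("1")
```

A small Hamming distance between two fingerprints suggests near-duplicate texts; the cutoff is application-specific and would need tuning on real data.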

3. MinHash:

MinHash is another locality-sensitive hashing algorithm, used to estimate the Jaccard similarity between sets of items. It's particularly effective for detecting near-duplicates based on overlapping sets of words or features.
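The following sketch shows one way MinHash could be applied to text. It assumes word-level shingles and simulates a family of hash functions by prefixing a seed before hashing with MD5; the helper names and the default of 128 hash functions are illustrative, not taken from any particular library.

```python
import hashlib


def word_shingles(text: str, k: int = 2) -> set:
    """Set of k consecutive-word shingles from the text."""
    words = text.lower().split()
    return {" ".join(words[i:i + k]) for i in range(len(words) - k + 1)}


def _seeded_hash(seed: int, item: str) -> int:
    data = f"{seed}:{item}".encode("utf-8")
    return int.from_bytes(hashlib.md5(data).digest()[:8], "big")


def minhash_signature(items: set, num_hashes: int = 128) -> list:
    """For each seeded hash function, record the minimum hash over the set."""
    if not items:
        raise ValueError("cannot build a signature for an empty set")
    return [min(_seeded_hash(seed, item) for item in items)
            for seed in range(num_hashes)]


def estimate_similarity(sig_a: list, sig_b: list) -> float:
    """Fraction of matching positions, an estimate of set similarity."""
    matches = sum(a == b for a, b in zip(sig_a, sig_b))
    return matches / len(sig_a)
```

More hash functions give a tighter estimate at the cost of longer signatures.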

4. Cosine Similarity:

Cosine similarity is the cosine of the angle between two vectors representing the texts; values close to 1 indicate highly similar texts. With term-frequency or TF-IDF vectors it captures shared vocabulary, while with embedding vectors it can detect near-duplicates based on semantic meaning.
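As a simple illustration, the sketch below computes cosine similarity over raw word-count vectors. This is an assumption made to keep the example dependency-free: it captures shared vocabulary only, and detecting duplicates by meaning would require embedding vectors from a trained model instead.

```python
import math
from collections import Counter


def cosine_similarity(text_a: str, text_b: str) -> float:
    """Cosine similarity between word-count vectors of two texts."""
    vec_a = Counter(text_a.lower().split())
    vec_b = Counter(text_b.lower().split())
    # Only words present in both texts contribute to the dot product.
    dot = sum(vec_a[word] * vec_b[word] for word in vec_a.keys() & vec_b.keys())
    norm_a = math.sqrt(sum(count * count for count in vec_a.values()))
    norm_b = math.sqrt(sum(count * count for count in vec_b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)
```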

5. Edit Distance Algorithms (Levenshtein Distance):

These algorithms quantify the minimum number of edits (insertions, deletions, substitutions) required to transform one string into another. A small edit distance suggests high similarity.
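For reference, Levenshtein distance can be computed with the standard dynamic-programming recurrence. The version below is a sketch that keeps only two rows of the table at a time; the function name is chosen for this example.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of insertions, deletions, and substitutions to turn a into b."""
    previous = list(range(len(b) + 1))  # distances from "" to each prefix of b
    for i, char_a in enumerate(a, start=1):
        current = [i]  # distance from a[:i] to ""
        for j, char_b in enumerate(b, start=1):
            substitution_cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,                        # delete char_a
                current[j - 1] + 1,                     # insert char_b
                previous[j - 1] + substitution_cost,    # substitute or match
            ))
        previous = current
    return previous[-1]
```

Because the running time grows with the product of the two lengths, this is usually better suited to short strings such as titles than to full documents.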

6. N-gram Overlap:

This technique compares the N-grams (sequences of N consecutive words or characters) that appear in different texts, along with how often they occur. High overlap indicates high similarity.
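One way to turn this into a score, sketched below under the assumption of word-level N-grams, is a frequency-aware Jaccard ratio: the shared N-gram counts divided by the combined N-gram counts. The function names and the default N of 2 are illustrative.

```python
from collections import Counter


def word_ngrams(text: str, n: int) -> Counter:
    """Count each sequence of n consecutive words in the text."""
    words = text.lower().split()
    return Counter(tuple(words[i:i + n]) for i in range(len(words) - n + 1))


def ngram_overlap(text_a: str, text_b: str, n: int = 2) -> float:
    """Frequency-aware Jaccard overlap of word n-grams, between 0 and 1."""
    grams_a = word_ngrams(text_a, n)
    grams_b = word_ngrams(text_b, n)
    shared = sum((grams_a & grams_b).values())    # minimum count per n-gram
    combined = sum((grams_a | grams_b).values())  # maximum count per n-gram
    return shared / combined if combined else 0.0
```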

7. Machine Learning Models:

Advanced machine learning models, such as recurrent neural networks (RNNs) and transformers, can be trained to detect duplicate content based on a large corpus of training data. These models often outperform simpler methods, particularly in detecting semantic duplicates.

Strategies for Mitigating or Eliminating Duplicate Results

Once duplicates are detected, several strategies can mitigate or eliminate them:

1. Data Cleaning and Deduplication:

Before the data is used by the "needs met" system, thoroughly cleaning and deduplicating the underlying dataset is essential. This involves identifying and removing or consolidating duplicate records.
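Consolidation might look like the sketch below, which groups records by a normalized key and merges their fields. The record layout (dictionaries with a `url` field), the function name, and the normalization rule are all hypothetical; note in particular that lowercasing a whole URL can merge paths that are distinct on case-sensitive servers.

```python
def consolidate(records, key_field="url"):
    """Merge records sharing a normalized key, keeping the first non-empty value per field."""
    merged = {}
    for record in records:
        # Hypothetical normalization: trim, lowercase, drop trailing slash.
        key = record[key_field].strip().lower().rstrip("/")
        target = merged.setdefault(key, {})
        for field, value in record.items():
            if value and not target.get(field):
                target[field] = value
    return list(merged.values())
```

Merging rather than deleting preserves information that only one copy of a duplicated record happens to carry.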

2. Improved Data Retrieval and Ranking Algorithms:

Implementing more sophisticated algorithms that prioritize unique results and effectively filter out duplicates is crucial. This may involve using techniques like ranking by diversity or relevance scores that penalize duplicate results.
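A relevance score that penalizes duplicates can be sketched as a greedy reranker: at each step it picks the candidate whose relevance, minus a penalty for resembling anything already selected, is highest. The word-set Jaccard similarity, the `penalty` weight, and the `(text, relevance)` input format are assumptions for this example.

```python
def jaccard(text_a: str, text_b: str) -> float:
    """Word-set Jaccard similarity between two texts."""
    set_a, set_b = set(text_a.lower().split()), set(text_b.lower().split())
    union = set_a | set_b
    return len(set_a & set_b) / len(union) if union else 0.0


def rerank_with_diversity(candidates, penalty=0.5):
    """candidates: list of (text, relevance) pairs. Returns texts in the new order."""
    remaining = list(candidates)
    selected = []

    def adjusted_score(item):
        text, relevance = item
        # Redundancy is the highest similarity to anything already chosen.
        redundancy = max((jaccard(text, chosen) for chosen, _ in selected), default=0.0)
        return relevance - penalty * redundancy

    while remaining:
        best = max(remaining, key=adjusted_score)
        remaining.remove(best)
        selected.append(best)
    return [text for text, _ in selected]
```

With a penalty of 0 this reduces to sorting by relevance; larger values push near-duplicates further down the list rather than removing them.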

3. Query Refinement and Ambiguity Resolution:

Improving the query processing system to better understand user intent and resolve ambiguities can help reduce the generation of redundant answers. This might involve using natural language processing (NLP) techniques to disambiguate queries.

4. Result Aggregation and Summarization:

Instead of returning multiple similar results, aggregating or summarizing the information from multiple sources into a single, comprehensive answer can improve the user experience.

5. Implementing a Robust Deduplication System:

Building a dedicated deduplication system that filters out duplicate results before they are presented to the user is highly effective. This system can incorporate various techniques mentioned above.
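A very small version of such a filter is sketched below. It assumes the results arrive already ranked, so the first copy of any near-duplicate group is the one worth keeping, and it uses the standard library's `difflib.SequenceMatcher` as a stand-in similarity measure; the function name and the 0.9 threshold are illustrative.

```python
from difflib import SequenceMatcher


def filter_near_duplicates(ranked_results, threshold=0.9):
    """Keep each result unless it is too similar to one already kept."""
    kept = []
    for candidate in ranked_results:
        is_duplicate = any(
            SequenceMatcher(None, candidate.lower(), earlier.lower()).ratio() >= threshold
            for earlier in kept
        )
        if not is_duplicate:
            kept.append(candidate)
    return kept
```

Comparing every candidate with every kept result is quadratic in the worst case, which is acceptable for a single page of results but would call for a hashing-based method on large collections.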

6. Regular Monitoring and Evaluation:

Regularly monitoring the system's performance and evaluating the frequency of duplicate results is essential to identify and address emerging issues. This includes analyzing user feedback and performing automated checks.
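One simple automated check is a duplicate rate per result list, which can be tracked over time. The metric below is a hypothetical example that counts only exact duplicates after case and whitespace normalization; a production check would likely use a near-duplicate measure as well.

```python
def duplicate_rate(results) -> float:
    """Fraction of results that repeat an earlier result (0.0 for an empty list)."""
    if not results:
        return 0.0
    unique = {" ".join(result.lower().split()) for result in results}
    return 1 - len(unique) / len(results)
```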

The Importance of Context in Duplicate Detection

It's vital to understand that whether detected duplicates should be removed depends heavily on context. In some scenarios, near-duplicates might be acceptable or even desirable:

- News aggregation: Presenting multiple news sources reporting on the same event can provide diverse perspectives.

- Product comparison sites: Showing multiple listings for the same product from different retailers can aid user decision-making.

- Educational resources: Providing multiple explanations of the same concept using different approaches might enhance understanding.

Therefore, a robust duplicate detection system should be configurable to account for context-specific requirements. This might involve setting thresholds for similarity scores, adjusting algorithms, or using different deduplication strategies for various types of "needs met" tasks.
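Per-task thresholds could be expressed as plain configuration, as in the sketch below. The task names and threshold values are invented for illustration and would need to be tuned against real data.

```python
# Hypothetical similarity thresholds: a higher value means only
# closer matches are treated as duplicates for that task type.
DEFAULT_THRESHOLD = 0.85
THRESHOLDS = {
    "question_answering": 0.80,
    "web_search": 0.90,
    "news_aggregation": 0.98,  # tolerate similar stories from different sources
}


def is_duplicate(similarity: float, task_type: str) -> bool:
    """Decide whether a similarity score counts as a duplicate for this task type."""
    return similarity >= THRESHOLDS.get(task_type, DEFAULT_THRESHOLD)
```

Keeping the thresholds outside the detection logic lets each task be adjusted without touching the similarity code.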

Future Directions in Duplicate Result Detection

Research continues to improve duplicate detection and mitigation techniques. Promising areas of future development include:

- Improved semantic understanding: Developing algorithms that more accurately capture the semantic meaning of text to identify near-duplicates based on meaning, not just literal similarity.

- Context-aware deduplication: Creating more adaptive systems that adjust their deduplication strategies based on the context of the task and user needs.

- Interactive deduplication: Allowing users to provide feedback on duplicate results, enhancing the system's learning and accuracy over time.

- Cross-lingual duplicate detection: Extending duplicate detection capabilities to handle multiple languages, a significant challenge due to differences in syntax and semantics.

In conclusion, the problem of duplicate results in "needs met" tasks is a complex one, requiring a multifaceted approach involving data cleaning, efficient algorithms, sophisticated detection techniques, and context-aware strategies. By effectively addressing this issue, system developers can significantly enhance the user experience, improve the efficiency of their systems, and achieve better overall performance. The ongoing development of improved algorithms and techniques promises to further refine the process of identifying and mitigating duplicate results in the future.
