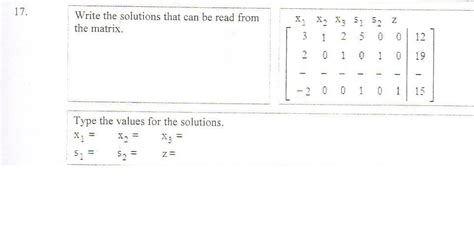

Write The Solutions That Can Be Read From The Matrix

Holbox

Mar 13, 2025 · 6 min read


Reading Solutions from the Matrix: A Comprehensive Guide

The concept of "reading solutions from a matrix" is surprisingly broad. It applies to fields such as mathematics, data science, operations research, and strategic planning. Although the specific methods vary with the context, the underlying principle is the same: extracting meaningful information and actionable insights from data arranged in matrix form. This article surveys the main techniques, their applications, and the kind of answer each one produces.

Understanding Matrices and Their Applications

Before exploring solution extraction methods, let's establish what a matrix is. A matrix is a rectangular array of numbers, symbols, or expressions arranged in rows and columns, and its individual elements are called entries. A matrix with m rows and n columns is called an m × n matrix.

Matrices find extensive use in:

- Linear Algebra: Solving systems of linear equations, performing transformations, representing linear mappings.

- Data Science: Representing datasets (e.g., feature matrices in machine learning), performing dimensionality reduction (PCA), clustering.

- Computer Graphics: Representing transformations (rotation, scaling, and, using homogeneous coordinates, translation) and projecting 3D objects onto 2D screens.

- Operations Research: Modeling linear programming problems, representing networks and flows.

- Engineering: Analyzing structural systems, solving circuit problems.

- Economics: Input-output analysis, modeling economic systems.

Methods for Extracting Solutions from Matrices

The specific method employed to extract solutions from a matrix depends heavily on the nature of the matrix and the problem being addressed. Let's explore some key techniques:

1. Solving Systems of Linear Equations

One of the most common applications of matrices is in solving systems of linear equations. A system of m linear equations with n unknowns can be represented in matrix form as Ax = b, where:

- A is the coefficient matrix (m × n)

- x is the vector of unknowns (n × 1)

- b is the vector of constants (m × 1)
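As a small illustration, take the made-up system 2x + y = 5, x + 3y = 10. Its coefficient matrix is A = [[2, 1], [1, 3]] and its constant vector is b = [5, 10], and its exact solution is x = 1, y = 3. Assuming NumPy is available, `numpy.linalg.solve` accepts A and b directly.

```python
import numpy as np

# Hypothetical system:  2x +  y =  5
#                        x + 3y = 10
A = np.array([[2.0, 1.0],
              [1.0, 3.0]])   # coefficient matrix (2 × 2)
b = np.array([5.0, 10.0])    # constant vector

x = np.linalg.solve(A, b)    # solves Ax = b; A must be square and nonsingular
print(x)

# Sanity check: substituting the solution back should reproduce b.
assert np.allclose(A @ x, b)
```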

Several methods exist for solving this system:

- Gaussian Elimination: A systematic process that uses elementary row operations to transform the augmented matrix [A|b] into one of two forms. In row echelon form, x is then found by back substitution; in reduced row echelon form, x can be read off directly. This is a fundamental technique and forms the basis for many other algorithms.

- LU Decomposition: Factoring A into a lower triangular matrix (L) and an upper triangular matrix (U) such that A = LU. In practice rows are also swapped for stability, giving PA = LU. The factorization itself costs O(n³) operations. After that, solving Ly = b by forward substitution and then Ux = y by back substitution costs only O(n²), so one factorization can be reused cheaply for many right-hand sides b.

- Cholesky Decomposition: A specialized factorization A = LLᵀ, where L is lower triangular. It applies only to symmetric positive definite matrices. It needs roughly half the work of LU decomposition and is numerically stable without pivoting.

- Matrix Inversion: If A is square and invertible (i.e., its determinant is nonzero), the solution is x = A⁻¹b, where A⁻¹ is the inverse of A. However, explicitly forming A⁻¹ costs more than solving the system directly and is typically less accurate in floating-point arithmetic. Numerical libraries therefore generally solve Ax = b through a factorization instead.

- Iterative Methods: For very large, often sparse, systems, iterative methods such as Jacobi, Gauss-Seidel, and successive over-relaxation (SOR) are often preferred. They start from an initial guess and refine it until the change between iterates, or the residual b − Ax, falls below a specified tolerance. Convergence is not guaranteed for every matrix; for Jacobi and Gauss-Seidel it is guaranteed when A is strictly diagonally dominant, for example.
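The sketch below makes the direct and iterative approaches concrete. It implements two of the methods above in pure Python:

- Gaussian elimination with partial pivoting, followed by back substitution.
- The Jacobi iteration.

The function names `gaussian_solve` and `jacobi_solve` and the 3 × 3 test system are illustrative choices, not a library API. The test matrix is strictly diagonally dominant, so Jacobi is guaranteed to converge, and both methods should return approximately x = (1, 2, 3).

```python
def gaussian_solve(A, b):
    """Solve Ax = b for square, nonsingular A by Gaussian elimination with partial pivoting."""
    n = len(A)
    # Build the augmented matrix [A | b] as floats (copies, so the inputs are unchanged).
    M = [[float(v) for v in row] + [float(rhs)] for row, rhs in zip(A, b)]
    for k in range(n):
        # Partial pivoting: move the row with the largest |entry| in column k into row k.
        p = max(range(k, n), key=lambda i: abs(M[i][k]))
        if abs(M[p][k]) < 1e-12:
            raise ValueError("matrix is singular or nearly singular")
        M[k], M[p] = M[p], M[k]
        # Eliminate the entries below the pivot to reach row echelon form.
        for i in range(k + 1, n):
            factor = M[i][k] / M[k][k]
            for j in range(k, n + 1):
                M[i][j] -= factor * M[k][j]
    # Back substitution on the resulting upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x


def jacobi_solve(A, b, tol=1e-10, max_iter=500):
    """Solve Ax = b with the Jacobi iteration (converges e.g. for strictly diagonally dominant A)."""
    n = len(A)
    x = [0.0] * n  # initial guess
    for _ in range(max_iter):
        # Every component of the new iterate uses only values from the previous iterate.
        x_new = [
            (b[i] - sum(A[i][j] * x[j] for j in range(n) if j != i)) / A[i][i]
            for i in range(n)
        ]
        # Stop when the largest change between iterates is below the tolerance.
        if max(abs(x_new[i] - x[i]) for i in range(n)) < tol:
            return x_new
        x = x_new
    raise RuntimeError("Jacobi iteration did not converge")


A = [[4, -1, 0],
     [-1, 4, -1],
     [0, -1, 4]]
b = [2, 4, 10]
print(gaussian_solve(A, b))
print(jacobi_solve(A, b))
```

Partial pivoting, which swaps in the row with the largest available pivot, keeps the elimination numerically stable. For real workloads a library routine such as `numpy.linalg.solve`, which relies on an LAPACK LU factorization, is the better choice.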

2. Eigenvalue and Eigenvector Analysis

Eigenvalues and eigenvectors provide crucial information about the properties of a matrix. A nonzero vector v is an eigenvector of a square matrix A if Av = λv for some scalar λ, called the corresponding eigenvalue. Eigenvalue analysis is vital in numerous applications:

- Principal Component Analysis (PCA): Used in dimensionality reduction to extract the most significant directions of variation in a dataset. The principal components are the eigenvectors of the data's covariance matrix with the largest eigenvalues, and each eigenvalue measures the variance along its component.

- Stability Analysis: In continuous-time dynamical systems, the eigenvalues of the system matrix (or of the Jacobian at an equilibrium point) determine the stability of that equilibrium. If all eigenvalues have negative real parts, the equilibrium is asymptotically stable; if any eigenvalue has a positive real part, it is unstable.

- Markov Chains: The stationary distribution of a Markov chain gives the long-term probability of being in each state. It is an eigenvector of the transition matrix for the eigenvalue 1 (a left eigenvector when each row of the transition matrix sums to 1), scaled so that its entries sum to 1.

- Spectral Clustering: A graph clustering technique that partitions a graph using the eigenvectors of its Laplacian matrix associated with the smallest eigenvalues.
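As one concrete case, the Markov-chain application takes only a few lines of NumPy. The 3-state transition matrix below is a made-up example whose rows sum to 1. The sketch takes the eigenvector of Pᵀ whose eigenvalue is closest to 1 and rescales it so its entries sum to 1. For this matrix the result is π = (0.625, 0.3125, 0.0625), which can be confirmed by checking that πP = π.

```python
import numpy as np

# Hypothetical row-stochastic transition matrix: P[i, j] = Pr(next state = j | current state = i).
P = np.array([[0.90, 0.075, 0.025],
              [0.15, 0.80, 0.05],
              [0.25, 0.25, 0.50]])

# A stationary distribution satisfies pi @ P = pi, i.e. P.T @ pi = pi,
# so pi is an eigenvector of P.T with eigenvalue 1.
eigenvalues, eigenvectors = np.linalg.eig(P.T)
k = np.argmin(np.abs(eigenvalues - 1.0))
pi = np.real(eigenvectors[:, k])
pi = pi / pi.sum()  # rescale to a probability vector (this also fixes the arbitrary sign)

print(pi)
assert np.allclose(pi @ P, pi)
```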

3. Singular Value Decomposition (SVD)

SVD decomposes any real m × n matrix A into three matrices U, Σ, and Vᵀ such that A = UΣVᵀ, where:

- U is an m × m orthogonal matrix whose columns are the left singular vectors of A.

- Σ is an m × n diagonal matrix whose diagonal entries are the singular values of A. These are nonnegative and conventionally sorted in decreasing order.

- Vᵀ is the transpose of an n × n orthogonal matrix V whose columns are the right singular vectors of A.
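The factorization can be checked numerically. This sketch assumes NumPy and uses an arbitrary 2 × 3 matrix. `numpy.linalg.svd` returns U, the singular values as a 1-D array s in decreasing order, and Vᵀ. With `full_matrices=False` it returns the reduced ("thin") form, in which Σ is square. For this matrix the singular values are 5 and 3, because AAᵀ = [[17, 8], [8, 17]] has eigenvalues 25 and 9.

```python
import numpy as np

A = np.array([[3.0, 2.0, 2.0],
              [2.0, 3.0, -2.0]])  # arbitrary 2 × 3 example

# Thin SVD: U is 2 × 2, s holds the 2 singular values, Vt is 2 × 3.
U, s, Vt = np.linalg.svd(A, full_matrices=False)
Sigma = np.diag(s)

print(s)
# The columns of U and the rows of Vt are orthonormal, and U Σ Vᵀ reproduces A.
assert np.allclose(U.T @ U, np.eye(2))
assert np.allclose(Vt @ Vt.T, np.eye(2))
assert np.allclose(U @ Sigma @ Vt, A)
```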

SVD has many applications, including:

- Recommendation Systems: Used in collaborative filtering to predict user preferences based on past interactions.

- Image Compression: Reducing the size of images by discarding less significant singular values.

- Noise Reduction: Filtering out noise from data by setting small singular values to zero.

- Dimensionality Reduction: Similar to PCA, SVD can be used to reduce the dimensionality of data.
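Image compression and noise reduction both rest on the truncated SVD. Keeping only the k largest singular values gives the best rank-k approximation of A in the Frobenius and spectral norms (the Eckart-Young theorem). The Frobenius error then equals the square root of the sum of the squared discarded singular values. The sketch below assumes NumPy and uses synthetic data, a random rank-2 matrix plus small noise, rather than a real image. `truncated_svd` is a hypothetical helper name.

```python
import numpy as np

def truncated_svd(A, k):
    """Return the rank-k approximation of A from its k largest singular values, plus all singular values."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    # Keep the first k singular triplets; s is sorted in decreasing order.
    return U[:, :k] @ np.diag(s[:k]) @ Vt[:k, :], s

rng = np.random.default_rng(0)
# Synthetic data: a rank-2 "signal" (50 × 30) plus small Gaussian noise.
signal = rng.normal(size=(50, 2)) @ rng.normal(size=(2, 30))
noisy = signal + 0.01 * rng.normal(size=signal.shape)

approx, s = truncated_svd(noisy, k=2)
error = np.linalg.norm(noisy - approx)  # Frobenius norm (the default for 2-D arrays)

# Eckart-Young: the error equals the root-sum-square of the discarded singular values.
assert np.isclose(error, np.sqrt(np.sum(s[2:] ** 2)))

# Storage: the rank-2 factors need 2 * (50 + 30 + 1) numbers instead of 50 * 30.
print(error, 2 * (50 + 30 + 1), 50 * 30)
```

Choosing k trades accuracy for storage; the right cutoff depends on how much of the data's structure the application needs to keep.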

4. Linear Programming and Optimization

Matrices play a central role in linear programming, a technique for optimizing a linear objective function subject to linear constraints. The problem can be formulated as:

- Maximize (or Minimize) cᵀx

- Subject to Ax ≤ b, x ≥ 0

where:

- c is the vector of objective coefficients (e.g., costs or profits per unit)

- x is the vector of decision variables

- A is the constraint matrix

- b is the right-hand-side vector (e.g., available resources)

Solving linear programming problems often involves using algorithms like the simplex method or interior-point methods. These methods utilize matrix operations to find the optimal solution.
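As a minimal sketch, assuming SciPy is installed, `scipy.optimize.linprog` solves problems in this form. It always minimizes, so a maximization is handled by negating c. The small problem below is invented for illustration: maximize 3x₁ + 5x₂ subject to x₁ + x₂ ≤ 4, x₁ + 3x₂ ≤ 6, and x ≥ 0. The corners of the feasible region are (0, 0), (4, 0), (3, 1), and (0, 2). Checking them shows the optimum is at (3, 1) with objective value 14.

```python
from scipy.optimize import linprog

c = [3, 5]              # objective coefficients (to maximize)
A_ub = [[1, 1],         # constraint matrix A in Ax <= b
        [1, 3]]
b_ub = [4, 6]           # right-hand sides b

# linprog minimizes, so maximize c^T x by minimizing (-c)^T x.
# The bounds (0, None) on every variable encode x >= 0.
res = linprog([-ci for ci in c], A_ub=A_ub, b_ub=b_ub,
              bounds=[(0, None), (0, None)])

if res.success:
    print("x =", res.x, "objective =", -res.fun)
else:
    print("solver failed:", res.message)
```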

5. Graph Theory and Network Analysis

Matrices can effectively represent graphs and networks. In the adjacency matrix A of an unweighted graph, entry (i, j) is 1 if there is an edge from node i to node j and 0 otherwise. For an undirected graph A is symmetric, and for a weighted graph the entries hold edge weights instead. Other matrices, such as the Laplacian L = D − A (where D is the diagonal matrix of node degrees), are used for various graph-theoretic calculations. Applications include:

- Shortest Path Algorithms: Finding the shortest path between nodes in a network (e.g., Dijkstra's algorithm).

- Community Detection: Identifying clusters or communities within a network.

- Centrality Measures: Assessing the importance of nodes within a network (e.g., degree centrality, betweenness centrality).
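The sketch below assumes NumPy and uses a made-up 5-node undirected graph: a triangle on nodes 0, 1, 2 plus a separate edge between nodes 3 and 4. It builds the adjacency matrix, reads degree centrality off the row sums, and forms the Laplacian L = D − A. It also applies a standard spectral fact behind community detection and spectral clustering: for an undirected graph, the number of zero eigenvalues of L equals the number of connected components, which is 2 here.

```python
import numpy as np

# Hypothetical undirected graph: a triangle (0, 1, 2) plus a separate edge (3, 4).
edges = [(0, 1), (0, 2), (1, 2), (3, 4)]
n = 5

A = np.zeros((n, n), dtype=int)
for i, j in edges:
    A[i, j] = A[j, i] = 1      # undirected, so A is symmetric

degree = A.sum(axis=1)         # degree centrality (unnormalized): [2 2 2 1 1]
L = np.diag(degree) - A        # graph Laplacian L = D - A

# L is symmetric, so eigvalsh applies; count eigenvalues that are numerically zero.
eigenvalues = np.linalg.eigvalsh(L)
components = int(np.sum(np.isclose(eigenvalues, 0.0)))
print(degree, components)
```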

Interpreting Results and Drawing Conclusions

Once you've extracted solutions from your matrix using the appropriate methods, the next crucial step is to interpret the results and draw meaningful conclusions. This involves:

- Understanding the context: Relate the numerical solutions back to the original problem or dataset.

- Visualizing the data: Create charts and graphs to aid in understanding the results.

- Statistical analysis: Perform statistical tests to assess the significance of the findings.

- Sensitivity analysis: Evaluate how changes in the input data affect the results.

- Validating the results: Compare the solutions with real-world observations or expectations.
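For a linear system, the validation and sensitivity checks above can be made quantitative. The sketch below assumes NumPy and uses a contrived, nearly singular 2 × 2 system. It first computes the residual ‖Ax − b‖ as a validation check. It then perturbs b and compares the relative change in x with the condition number κ(A), which bounds the amplification: ‖δx‖/‖x‖ ≤ κ(A)‖δb‖/‖b‖. Here a relative change of roughly 3.5 × 10⁻⁵ in b moves the solution from (1, 1) to (0, 2).

```python
import numpy as np

A = np.array([[1.0, 1.0],
              [1.0, 1.0001]])   # nearly singular, hence ill-conditioned
b = np.array([2.0, 2.0001])

x = np.linalg.solve(A, b)                  # exact solution is (1, 1)
residual = np.linalg.norm(A @ x - b)       # validation: does x satisfy Ax = b?

# Sensitivity: perturb b slightly and re-solve.
delta_b = np.array([0.0, 0.0001])
x_new = np.linalg.solve(A, b + delta_b)    # exact solution is (0, 2)

rel_change_b = np.linalg.norm(delta_b) / np.linalg.norm(b)
rel_change_x = np.linalg.norm(x_new - x) / np.linalg.norm(x)
kappa = np.linalg.cond(A)                  # 2-norm condition number, roughly 4e4 here

print(f"residual={residual:.1e}  rel. change in b={rel_change_b:.1e}  "
      f"rel. change in x={rel_change_x:.1e}  cond(A)={kappa:.1e}")
```

A tiny residual confirms that x solves the system as given. The large ratio between the two relative changes shows that it says little about how trustworthy x is when b itself is uncertain.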

Advanced Techniques and Considerations

- Sparse Matrices: When most entries of a matrix are zero, specialized storage formats and algorithms that handle only the nonzero entries greatly reduce memory use and computation time.

- Parallel Computing: Large matrix operations can be parallelized to reduce computation time.

- Numerical Stability: Careful attention to rounding errors is crucial, especially for ill-conditioned matrices (those with a large condition number). For such matrices, small changes in the input can produce large changes in the solution.

- Software Tools: Software packages such as MATLAB, Python (with NumPy and SciPy), and R provide extensive functionality for matrix operations and analysis.
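To illustrate the sparse-matrix point, the sketch below assumes SciPy and uses a standard tridiagonal test matrix rather than one from a particular application. The 1000 × 1000 matrix has 2 on the diagonal and −1 on the neighboring diagonals. Only 2,998 of its 1,000,000 entries are nonzero, and `scipy.sparse.linalg.spsolve` solves the system without ever forming the dense matrix.

```python
import numpy as np
from scipy.sparse import diags
from scipy.sparse.linalg import spsolve

n = 1000
# Tridiagonal matrix: -1 on the sub- and superdiagonals, 2 on the main diagonal.
# Stored in compressed sparse column (CSC) format, which spsolve handles efficiently.
A = diags([-1.0, 2.0, -1.0], offsets=[-1, 0, 1], shape=(n, n), format="csc")
b = np.ones(n)

x = spsolve(A, b)

print(A.nnz, "nonzeros out of", n * n)   # 3n - 2 = 2998 stored entries
assert np.allclose(A @ x, b)              # residual check
```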

Conclusion

Reading solutions from a matrix is a powerful technique with applications across many fields, and the right method depends on the problem and on the structure of the matrix. Mastering these techniques lets you turn matrix data into well-founded, data-driven decisions. The process extends beyond calculation, however: interpreting the results in the context of the original problem matters as much as computing them. Exploring the advanced techniques above will further extend what you can do with matrices.
