What Purpose Do Fairness Measures Serve in AI Product Development?

Holbox

Mar 11, 2025 · 6 min read

Artificial intelligence (AI) systems are now deployed across healthcare, finance, criminal justice, education, and many other sectors. That growing reliance raises significant ethical concerns, particularly around fairness and bias. This article examines the role of fairness measures in AI product development: what purpose they serve, and why ensuring equitable outcomes is difficult.

The Importance of Fairness in AI

AI systems learn from data. If that data reflects existing societal biases, a model trained on it can perpetuate and even amplify those biases unless they are detected and corrected. The result can be discriminatory outcomes that disproportionately affect marginalized groups. Consider these examples:

- Facial Recognition: Studies have shown that some facial recognition systems have markedly higher error rates for individuals with darker skin tones, which can lead to misidentification and wrongful arrests.

- Loan Applications: AI-powered loan applications might unfairly deny loans to individuals from certain demographic groups due to biases present in the historical loan data used for training.

- Hiring Processes: AI-driven recruitment tools could discriminate against candidates based on gender, race, or other protected characteristics if trained on data reflecting historical hiring biases.

These examples highlight the potential for AI systems to exacerbate existing inequalities, undermining trust and creating significant social and economic harm. The incorporation of fairness measures is therefore not merely a desirable feature, but a fundamental necessity for responsible AI development.

The Purpose of Fairness Measures

Fairness measures in AI product development serve several crucial purposes:

1. Mitigating Bias and Discrimination

The primary purpose of fairness measures is to identify and mitigate biases embedded within the data and algorithms used to build AI systems. This involves employing various techniques to detect and correct biases, ensuring that the AI system treats all individuals fairly, regardless of their protected characteristics. This is crucial for preventing discriminatory outcomes and promoting social justice.

2. Enhancing Transparency and Explainability

Many AI systems, particularly deep learning models, are described as "black boxes" because it is difficult to understand how they arrive at their decisions. Fairness measures promote transparency by reporting how a system behaves for each demographic group, which helps developers locate potential sources of bias and understand why certain outcomes are produced. This explainability is essential for building trust and accountability.

3. Promoting Accountability and Responsibility

By incorporating fairness measures, developers take responsibility for the potential impact of their AI systems. This involves actively monitoring the performance of the system, identifying any instances of bias or discrimination, and taking corrective action. This commitment to accountability fosters trust among users and stakeholders.

4. Ensuring Equitable Outcomes

Ultimately, the goal of fairness measures is to ensure that AI systems produce equitable outcomes across different demographic groups. This means that the benefits and risks associated with the AI system are distributed fairly, preventing any group from being disproportionately disadvantaged.

Defining Fairness: A Multifaceted Challenge

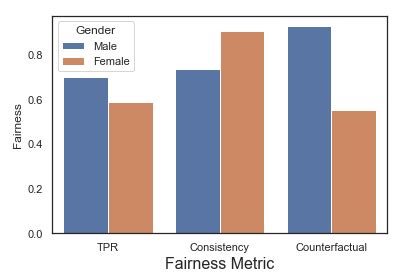

Defining fairness in the context of AI is far from straightforward. There is no single, universally accepted definition, and the concept of fairness can vary depending on the specific application and context. Different fairness metrics might prioritize different aspects of fairness, leading to potential trade-offs. Some commonly used fairness metrics include:

- Demographic Parity: Requiring that the rate of positive outcomes (e.g., loan approvals, job offers) is the same across demographic groups, regardless of the true labels.

- Equalized Odds: Requiring that the true positive rate and the false positive rate are both equal across groups, so that the system's errors do not fall more heavily on one group.

- Predictive Rate Parity: Requiring that the positive predictive value (the proportion of true positives among all predicted positives) is equal across groups. This is also called predictive parity.

- Counterfactual Fairness: Requiring that the outcome for an individual would have been the same had that individual belonged to a different demographic group, with everything not causally dependent on group membership held fixed.
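The first three metrics above can be computed directly from a model's binary predictions. The following is a minimal sketch, assuming binary labels and predictions encoded as 0/1 and a single group label per record; the function names `group_rates` and `gap` and the toy data are illustrative assumptions, not part of any particular library.

```python
from collections import defaultdict


def group_rates(y_true, y_pred, groups):
    """Per-group selection rate, TPR, FPR and PPV for 0/1 labels and predictions."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        # "t"/"f" = prediction correct or not, "p"/"n" = predicted positive or negative
        key = ("t" if t == p else "f") + ("p" if p == 1 else "n")
        counts[g][key] += 1

    def ratio(num, den):
        return num / den if den else float("nan")

    rates = {}
    for g, c in counts.items():
        n = sum(c.values())
        rates[g] = {
            "selection_rate": ratio(c["tp"] + c["fp"], n),  # demographic parity
            "tpr": ratio(c["tp"], c["tp"] + c["fn"]),        # equalized odds, part 1
            "fpr": ratio(c["fp"], c["fp"] + c["tn"]),        # equalized odds, part 2
            "ppv": ratio(c["tp"], c["tp"] + c["fp"]),        # predictive rate parity
        }
    return rates


def gap(rates, metric):
    """Largest between-group difference for one metric (0 means parity)."""
    values = [r[metric] for r in rates.values()]
    return max(values) - min(values)


# Toy data (hypothetical): four records in each of two groups.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = group_rates(y_true, y_pred, groups)
for name in ("selection_rate", "tpr", "fpr", "ppv"):
    print(name, {g: round(r[name], 3) for g, r in rates.items()}, "gap:", round(gap(rates, name), 3))
```

On this toy data group A is selected 75% of the time and group B 25% of the time, so the demographic parity gap is 0.5. Reporting a gap per metric, rather than a single "fairness score", keeps the trade-offs between metrics visible.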

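The choice of fairness metric depends on the specific application and on the values prioritized by developers and stakeholders. Some trade-offs are unavoidable: when the proportion of true positives (the base rate) differs between groups, an imperfect classifier cannot in general satisfy equalized odds and predictive rate parity at the same time. Selecting a metric is therefore a judgment about which kind of error matters most in context, not a purely technical decision.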
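One way to see why such trade-offs arise is to write the positive predictive value in terms of the true positive rate (TPR), the false positive rate (FPR), and a group's base rate $p$ (the fraction of that group whose true label is positive). This follows from Bayes' rule; the numbers below are an illustrative assumption, not data from any real system.

$$
\mathrm{PPV} = \frac{\mathrm{TPR} \cdot p}{\mathrm{TPR} \cdot p + \mathrm{FPR} \cdot (1 - p)}
$$

Suppose a model has the same error rates for two groups, $\mathrm{TPR} = 0.8$ and $\mathrm{FPR} = 0.2$, but the base rates are $p_A = 0.5$ and $p_B = 0.2$:

$$
\mathrm{PPV}_A = \frac{0.8 \cdot 0.5}{0.8 \cdot 0.5 + 0.2 \cdot 0.5} = \frac{0.40}{0.50} = 0.8,
\qquad
\mathrm{PPV}_B = \frac{0.8 \cdot 0.2}{0.8 \cdot 0.2 + 0.2 \cdot 0.8} = \frac{0.16}{0.32} = 0.5
$$

The model's error rates are identical across groups, yet a positive prediction is correct 80% of the time for group A and only 50% of the time for group B. Equalizing the positive predictive value would require giving the groups different error rates.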

Implementing Fairness Measures: A Practical Approach

Implementing fairness measures requires a multifaceted approach throughout the entire AI development lifecycle:

1. Data Collection and Preprocessing:

- Addressing Data Bias: Carefully examine the data used to train the AI system for potential biases. This includes checking for underrepresentation of certain demographic groups and the presence of biased features.

- Data Augmentation: Supplement the dataset with additional data to balance the representation of different groups and reduce the impact of existing biases.

- Feature Engineering: Select and engineer features carefully, avoiding features that could perpetuate existing biases.
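The data checks above can start with something as simple as counting. The sketch below reports each group's share of the dataset and then computes per-record weights using reweighing, a preprocessing technique that this article does not name but that serves the same balancing goal as augmentation: each (group, label) combination is weighted so that, in the weighted data, the label is statistically independent of the group. The function names and the toy data are assumptions for illustration.

```python
from collections import Counter


def group_shares(groups):
    """Fraction of records belonging to each group (a basic representation check)."""
    n = len(groups)
    return {g: c / n for g, c in Counter(groups).items()}


def reweighing_weights(groups, labels):
    """Weight w(g, y) = P(g) * P(y) / P(g, y) for every record.

    Over-represented (group, label) pairs get weights below 1,
    under-represented pairs get weights above 1.
    """
    n = len(labels)
    n_group = Counter(groups)
    n_label = Counter(labels)
    n_joint = Counter(zip(groups, labels))
    return [
        (n_group[g] * n_label[y]) / (n * n_joint[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels within one group."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    return positive / total


# Toy historical data (hypothetical): group A was approved far more often than group B.
groups = ["A"] * 6 + ["B"] * 4
labels = [1, 1, 1, 1, 0, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
print("shares:", group_shares(groups))
for g in ("A", "B"):
    print(g, "weighted positive rate:", round(weighted_positive_rate(groups, labels, weights, g), 3))
```

In the raw data the positive rate is 4/6 for group A and 1/4 for group B; after weighting both equal the overall rate of 0.5. The weights can then be passed to any training routine that accepts per-sample weights. Reweighing does not add information about under-represented groups, so it complements rather than replaces collecting more representative data.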

2. Algorithm Selection and Training:

- Fairness-Aware Algorithms: Employ algorithms designed to explicitly incorporate fairness constraints into the training process.

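- Regularization Techniques: Add a fairness penalty to the training objective, so that the model is penalized for differences in its predictions or error rates between groups as well as for prediction error.

- Adversarial Training: Train the model alongside an adversary that tries to predict the protected attribute from the model's outputs or internal representations. The model is rewarded for making accurate predictions while giving the adversary as little information as possible.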
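To make the regularization idea concrete, here is a minimal sketch of logistic regression trained by gradient descent with an added penalty on the squared difference between the two groups' mean predicted scores (a demographic-parity style penalty). The synthetic data, the penalty form, and the hyperparameters `lam`, `lr`, and `epochs` are all assumptions chosen for illustration; production systems would normally rely on a maintained fairness library rather than hand-written training loops.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def scores(X, w, b):
    return [sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) for x in X]


def score_gap(s, group):
    """Absolute difference between the mean scores of group 1 and group 0."""
    m1 = [si for si, g in zip(s, group) if g == 1]
    m0 = [si for si, g in zip(s, group) if g == 0]
    return abs(sum(m1) / len(m1) - sum(m0) / len(m0))


def train(X, y, group, lam=0.0, lr=0.2, epochs=500):
    """Minimize mean cross-entropy + lam * (mean score gap between groups)^2."""
    n, d = len(X), len(X[0])
    idx = {g: [i for i in range(n) if group[i] == g] for g in (0, 1)}
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        s = scores(X, w, b)
        # Gradient of the mean cross-entropy loss.
        gw = [sum((s[i] - y[i]) * X[i][j] for i in range(n)) / n for j in range(d)]
        gb = sum(s[i] - y[i] for i in range(n)) / n
        # Gradient of the fairness penalty lam * gap^2.
        gap = (sum(s[i] for i in idx[1]) / len(idx[1])
               - sum(s[i] for i in idx[0]) / len(idx[0]))
        for sign, g in ((1.0, 1), (-1.0, 0)):
            for i in idx[g]:
                c = 2.0 * lam * gap * sign * s[i] * (1.0 - s[i]) / len(idx[g])
                gb += c
                for j in range(d):
                    gw[j] += c * X[i][j]
        w = [wj - lr * gj for wj, gj in zip(w, gw)]
        b -= lr * gb
    return w, b


# Synthetic data (hypothetical): labels favor group 1, and the second feature
# is a proxy that leaks group membership.
rng = random.Random(0)
X, y, group = [], [], []
for i in range(200):
    g = i % 2
    skill = rng.gauss(0.0, 1.0)
    proxy = g + rng.gauss(0.0, 0.3)
    X.append([skill, proxy])
    y.append(1 if skill + 1.0 * g + rng.gauss(0.0, 0.5) > 0.5 else 0)
    group.append(g)

w_plain, b_plain = train(X, y, group, lam=0.0)
w_fair, b_fair = train(X, y, group, lam=5.0)
gap_plain = score_gap(scores(X, w_plain, b_plain), group)
gap_fair = score_gap(scores(X, w_fair, b_fair), group)
print("score gap without penalty:", round(gap_plain, 3))
print("score gap with penalty:   ", round(gap_fair, 3))
```

The penalty weight `lam` controls the trade-off: at zero the model is free to exploit the proxy feature, and as it grows the model gives up some predictive accuracy in exchange for a smaller gap between groups.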

3. Model Evaluation and Monitoring:

- Fairness Metrics: Employ a range of fairness metrics to evaluate the model's performance across different demographic groups.

- Bias Detection Tools: Utilize bias detection tools to identify potential sources of bias within the model.

- Ongoing Monitoring: Continuously monitor the model's performance over time to detect and address any emerging biases.
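Ongoing monitoring can be sketched as a rolling check on recent predictions. The example below keeps a fixed-size window of (group, prediction) pairs and flags when the gap in positive-prediction rates between groups exceeds a threshold. The class name `ParityMonitor`, the window size, and the threshold are hypothetical choices for illustration; suitable values depend on traffic volume and on the application.

```python
from collections import deque


class ParityMonitor:
    """Tracks the selection-rate gap between groups over the most recent predictions."""

    def __init__(self, window=100, threshold=0.1):
        self.records = deque(maxlen=window)  # oldest records drop out automatically
        self.threshold = threshold

    def record(self, group, prediction):
        """Store one prediction (0 or 1) together with the group it was made for."""
        self.records.append((group, prediction))

    def gap(self):
        """Largest difference in positive-prediction rate between any two groups."""
        totals, positives = {}, {}
        for g, p in self.records:
            totals[g] = totals.get(g, 0) + 1
            positives[g] = positives.get(g, 0) + p
        if len(totals) < 2:
            return 0.0  # nothing to compare yet
        rates = [positives[g] / totals[g] for g in totals]
        return max(rates) - min(rates)

    def alert(self):
        return self.gap() > self.threshold


monitor = ParityMonitor(window=8, threshold=0.2)

# Early traffic: both groups receive positive predictions at the same rate.
for g, p in [("A", 1), ("B", 1), ("A", 0), ("B", 0)]:
    monitor.record(g, p)
print("gap:", monitor.gap(), "alert:", monitor.alert())

# Later traffic drifts: group A is always selected, group B never.
for g, p in [("A", 1), ("B", 0)] * 4:
    monitor.record(g, p)
print("gap:", monitor.gap(), "alert:", monitor.alert())
```

A small window reacts quickly but is noisy when a group has few recent records, so a real deployment would likely also require a minimum count per group before raising an alert.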

Addressing the Challenges of Fairness in AI

Despite the increasing awareness of the importance of fairness in AI, significant challenges remain:

- Defining Fairness: The lack of a universally accepted definition of fairness makes it difficult to establish clear benchmarks and evaluate progress.

- Trade-offs Between Fairness and Accuracy: Improving fairness can sometimes lead to a decrease in accuracy, requiring careful consideration of the trade-offs involved.

- Technical Complexity: Implementing fairness measures can be technically challenging, requiring specialized expertise and sophisticated tools.

- Data Scarcity: The lack of representative data for certain demographic groups can hinder the development of fair AI systems.

- Lack of Standardization: The lack of standardization in fairness metrics and techniques makes it difficult to compare and evaluate different approaches.

Conclusion: Towards a Fairer Future with AI

Ensuring fairness in AI is not only a technical challenge; it is an ethical and social responsibility. Fairness measures exist to keep AI systems from perpetuating and amplifying existing biases, which would otherwise produce discriminatory outcomes and undermine trust. Putting them into practice takes careful data collection, algorithm selection, model evaluation, and ongoing monitoring. Significant challenges remain, but treating fairness as a core principle of AI development makes it possible to harness the technology's potential while mitigating its risks and sharing its benefits more widely. The work is ongoing, and it demands sustained commitment.
