What Challenges Does Generative AI Face With Respect to Data?

Holbox

Mar 11, 2025

Table of Contents

- What Challenges Does Generative AI Face With Respect to Data?

- The Data Hunger of Generative AI Models

- 1. Data Scarcity in Specific Domains:

- 2. Data Bias and its Propagation:

- 3. The Problem of Noisy and Inconsistent Data:

- 4. Data Privacy and Security Concerns:

- 5. The High Cost of Data Acquisition and Annotation:

- 6. Data Drift and Model Degradation:

- Addressing the Data Challenges of Generative AI: Strategies and Solutions

- 1. Data Augmentation Techniques:

- 2. Synthetic Data Generation:

- 3. Federated Learning:

- 4. Transfer Learning:

- 5. Data Governance and Ethical Frameworks:

- 6. Active Learning and Human-in-the-Loop Approaches:

- 7. Data Quality Assurance and Validation:

- Conclusion: Navigating the Data Landscape of Generative AI

What Challenges Does Generative AI Face With Respect to Data?

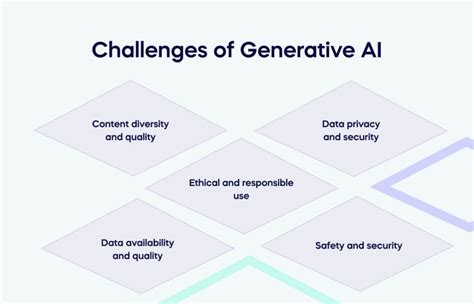

Generative AI, the technology that creates new content ranging from text and images to music and code, is rapidly transforming many industries. That capability, however, depends entirely on the data the models are trained on. The data challenges generative AI faces are varied and significant: they slow its progress and raise ethical concerns. This article examines these challenges, covering data quality, bias, privacy, security, and the sheer scale of data required for effective training.

The Data Hunger of Generative AI Models

At the heart of generative AI's capabilities lies its training data. These models, often based on sophisticated architectures like transformers and GANs (Generative Adversarial Networks), require massive datasets to learn patterns, relationships, and the underlying structure of the information they process. This insatiable appetite for data presents several formidable challenges:

1. Data Scarcity in Specific Domains:

While vast quantities of data exist online, access to high-quality, relevant data within specific domains remains a significant hurdle. Training a generative AI model for a niche application, such as designing specialized medical equipment or creating highly realistic simulations of rare geological formations, requires highly curated and often scarce datasets. Acquiring, cleaning, and annotating this data can be time-consuming, expensive, and sometimes simply impossible.

2. Data Bias and its Propagation:

Generative AI models are trained on existing data, which often reflects existing societal biases. This can lead to the perpetuation and even amplification of these biases in the generated content. For example, a language model trained on a dataset with a disproportionate representation of one gender might generate text that consistently favors that gender, reinforcing harmful stereotypes. The consequences of biased AI systems can be far-reaching, affecting decision-making processes in areas like hiring, loan applications, and even criminal justice. Mitigating bias requires careful data curation, algorithmic adjustments, and ongoing monitoring of the generated output.
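
A practical first step in data curation is to measure how groups are represented before training. The Python sketch below assumes each record already carries a group label under a hypothetical `group` key, and its 0.8 threshold is an arbitrary illustrative choice rather than an established standard. It reports each group's share of the data and flags groups that are much smaller than the largest one.

```python
from collections import Counter


def representation_report(records, key="group", min_ratio=0.8):
    """Return each group's share of the dataset and the groups that are
    under-represented relative to the most common group.

    `key` and `min_ratio` are illustrative assumptions, not a standard.
    """
    counts = Counter(r[key] for r in records)
    total = sum(counts.values())
    shares = {g: c / total for g, c in counts.items()}
    largest = max(counts.values())
    # Flag any group whose count is below min_ratio times the largest group.
    flagged = sorted(g for g, c in counts.items() if c < min_ratio * largest)
    return shares, flagged


if __name__ == "__main__":
    data = [{"group": "female"}] * 30 + [{"group": "male"}] * 70
    shares, flagged = representation_report(data)
    print(shares)   # {'female': 0.3, 'male': 0.7}
    print(flagged)  # ['female']
```

Counting labels only catches imbalance in who appears in the data; it will not detect stereotyped associations in how groups are described, which requires examining the content itself and the model's outputs.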

3. The Problem of Noisy and Inconsistent Data:

The internet, a primary source of training data for many generative AI models, is rife with inconsistencies, inaccuracies, and outright misinformation. Training models on noisy data can lead to unpredictable and unreliable results. The model might learn to generate outputs that are factually incorrect, illogical, or even harmful. Robust data cleaning and preprocessing techniques are crucial to minimize the impact of noisy data, but completely eliminating it is often a near-impossible task. This necessitates rigorous validation and verification processes post-training.
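
As a minimal sketch of the cleaning and preprocessing step, the Python function below normalizes Unicode and whitespace, drops fragments too short to be useful, and removes duplicates that differ only in case or spacing. The function name and the `min_words` threshold are illustrative assumptions.

```python
import re
import unicodedata


def clean_corpus(texts, min_words=3):
    """Normalize, filter, and deduplicate raw text snippets.

    The threshold is illustrative; real pipelines tune it per source.
    """
    seen = set()
    cleaned = []
    for text in texts:
        # Unify equivalent Unicode forms (e.g. full-width characters).
        text = unicodedata.normalize("NFKC", text)
        # Collapse runs of whitespace and trim the ends.
        text = re.sub(r"\s+", " ", text).strip()
        # Drop fragments too short to carry useful signal.
        if len(text.split()) < min_words:
            continue
        # Case-insensitive key so trivially different copies are removed.
        key = text.lower()
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(text)
    return cleaned
```

Filters like these remove formatting noise and duplication cheaply, but they cannot tell whether a sentence is true; catching factual errors and misinformation still requires careful source selection and post-training validation.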

4. Data Privacy and Security Concerns:

Training generative AI models often involves handling sensitive personal information, which raises serious concerns about data privacy and security. If the training data contains protected health information, financial records, or other sensitive data, there is a risk of data breaches and unauthorized access. Furthermore, models can memorize parts of their training data, so the generated output itself might inadvertently reproduce sensitive information, leading to privacy violations. Strict data anonymization techniques, secure data storage practices, and robust privacy-preserving training methods are essential to address these concerns, and compliance with relevant regulations like GDPR and CCPA is paramount.
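
Anonymization can start with something as simple as pattern-based redaction before text enters a training set. The sketch below uses deliberately simple, hypothetical regular expressions to replace e-mail addresses and phone-number-like digit runs with placeholders. It is only an illustration: production PII detection needs much broader coverage (names, addresses, account numbers) and usually dedicated tooling, and redaction is not by itself sufficient for regulatory compliance.

```python
import re

# Deliberately simple patterns; real PII detection needs far broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text):
    """Replace e-mail addresses and phone-like digit runs with placeholders."""
    # Redact e-mails first so digits inside addresses are not treated as phones.
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text
```

The phone pattern errs toward over-redaction and will also catch some other long digit sequences, such as ISO dates, which is usually the safer failure mode for training data.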

5. The High Cost of Data Acquisition and Annotation:

Acquiring and preparing large datasets for training can be incredibly expensive. The cost includes not only the acquisition of the raw data but also the significant labor involved in cleaning, annotating, and organizing it. Data annotation, in particular, often requires specialized expertise and can be a time-consuming process. The high cost of data acts as a barrier to entry for smaller organizations and researchers, potentially exacerbating existing inequalities in the field. Innovative approaches to data acquisition and annotation are needed to make generative AI more accessible.

6. Data Drift and Model Degradation:

The real world is constantly evolving, and data distributions change over time, a phenomenon known as data drift. A model trained on a fixed snapshot of past data can degrade significantly as the data it encounters in use diverges from that snapshot: its outputs become less relevant, less accurate, and increasingly unreliable. Monitoring for drift and regularly retraining or adapting the model on new data are crucial to maintain its effectiveness, but this continuous learning process demands significant computational resources and careful data management.
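
One common way to monitor for drift is the Population Stability Index (PSI), which compares how a numeric feature is distributed in the training data versus recent data. The sketch below assumes equal-width bins over the reference range (quantile bins are also common) and sums (a - e) * ln(a / e) over the bins, where e and a are the reference and current proportions.

```python
import math


def psi(reference, current, bins=10, eps=1e-6):
    """Population Stability Index between two samples of a numeric feature.

    Bins are equal-width over the reference range; values outside that range
    fall into the first or last bin. `eps` avoids log(0) for empty bins.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        return [max(c / len(values), eps) for c in counts]

    expected = proportions(reference)
    actual = proportions(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))
```

Identical samples give a PSI of 0. A frequently quoted rule of thumb treats values below about 0.1 as stable and above about 0.25 as a significant shift, but thresholds should be tuned per application. For text or images, the same idea can be applied to summary features or embeddings rather than raw inputs.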

Addressing the Data Challenges of Generative AI: Strategies and Solutions

The challenges outlined above are not insurmountable. Significant progress is being made in addressing them, although solutions often involve trade-offs. Several promising strategies are emerging:

1. Data Augmentation Techniques:

Data augmentation involves artificially increasing the size of the training dataset by creating modified versions of existing data points. For example, images can be rotated, flipped, or slightly altered to create new training examples. This helps to address the problem of data scarcity while potentially mitigating bias by increasing diversity in the dataset.
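
The flip and rotation example can be made concrete with a minimal, dependency-free Python sketch. Here an image is assumed to be a list of pixel rows (a simplification; real pipelines use array or tensor libraries), and each original yields four extra variants: a horizontal mirror and three 90-degree rotations.

```python
def hflip(image):
    """Mirror an image (a list of pixel rows) left to right."""
    return [row[::-1] for row in image]


def rotate90(image):
    """Rotate an image 90 degrees clockwise."""
    # Reversing the row order and then transposing gives a clockwise rotation.
    return [list(col) for col in zip(*image[::-1])]


def augment(image):
    """Return the original plus a mirrored copy and three rotated copies."""
    variants = [image, hflip(image)]
    rotated = image
    for _ in range(3):
        rotated = rotate90(rotated)
        variants.append(rotated)
    return variants
```

Augmentations must preserve the meaning of the label: mirroring is harmless for a photo of a cat but can corrupt images containing text, or medical scans where left and right matter.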

2. Synthetic Data Generation:

Generating synthetic data that mimics the statistical characteristics of real data, without reproducing actual sensitive records, is another promising approach. It lets researchers train generative AI models on large datasets while reducing privacy and security risks. However, synthetic data is only useful if it accurately reflects the nuances and complexities of real-world data, and a generator fitted too closely to real records can still leak details about them.
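
A deliberately naive illustration of the idea: fit a mean and standard deviation to each numeric column of a real table, then sample new rows from independent normal distributions. The function names and the example data are hypothetical.

```python
import random
import statistics


def fit_gaussian_columns(rows):
    """Estimate a mean and sample standard deviation for each numeric column."""
    columns = list(zip(*rows))
    return [(statistics.mean(c), statistics.stdev(c)) for c in columns]


def sample_synthetic(params, n, seed=None):
    """Draw n synthetic rows, sampling each column independently."""
    rng = random.Random(seed)
    return [[rng.gauss(mu, sigma) for mu, sigma in params] for _ in range(n)]


# Hypothetical "real" table of (height_cm, weight_kg) rows.
real = [[170.0, 65.0], [180.0, 80.0], [165.0, 60.0], [175.0, 72.0]]
synthetic = sample_synthetic(fit_gaussian_columns(real), n=1000, seed=0)
```

Because each column is sampled independently, this sketch preserves per-column averages and spreads but loses correlations (for example, between height and weight), and it offers no formal privacy guarantee. Practical generators model the joint distribution, and approaches such as differentially private training are used when formal guarantees are required.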

3. Federated Learning:

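Federated learning allows multiple parties to collaboratively train a shared model without directly sharing their data: each participant trains on its own data locally and sends only model updates to a coordinating server, which aggregates them. Keeping raw data local reduces privacy risk while still letting the model learn from more data than any single party holds, although model updates can leak some information, so federated learning is often combined with techniques such as secure aggregation or differential privacy. This decentralized approach is particularly valuable when data is distributed across different organizations or individuals, such as hospitals or mobile devices.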
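
Federated averaging (FedAvg) is one widely used way to do this. In the minimal Python sketch below, each client runs a few steps of gradient descent on a one-dimensional linear regression using only its own data, and the server averages the resulting weights in proportion to each client's dataset size. The model, learning rate, and round counts are illustrative assumptions.

```python
def local_update(weights, data, lr=0.1, epochs=5):
    """Run a few epochs of gradient descent on one client's private data.

    The model is a 1-D linear regression y = w * x + b trained on mean
    squared error; only the updated weights and the local sample count
    are returned to the server, never the data itself.
    """
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b), n


def federated_averaging(clients, rounds=100, weights=(0.0, 0.0)):
    """FedAvg-style training: average client weights by local dataset size."""
    for _ in range(rounds):
        updates = [local_update(weights, data) for data in clients]
        total = sum(n for _, n in updates)
        weights = tuple(
            sum(wts[i] * n for wts, n in updates) / total for i in range(2)
        )
    return weights
```

Only the (w, b) pairs cross the network; the raw (x, y) pairs never leave `local_update`. Real systems add secure aggregation or differential privacy on top, and must cope with clients whose data distributions differ, which this toy example does not model.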

4. Transfer Learning:

Transfer learning takes a model pre-trained on a large, general-purpose dataset and fine-tunes it for a specific task. This reduces the amount of task-specific data needed, shortens training, and can improve performance. It is particularly useful when data in a specific domain is limited.
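
The core pattern (keep the pre-trained part frozen and train only a small task-specific head) can be sketched in plain Python. The "pre-trained" extractor here is a hypothetical stand-in with fixed, made-up weights; in practice it would be a real model loaded from a deep learning framework, and some or all of its layers may also be fine-tuned.

```python
import math

# Hypothetical stand-in for a large pre-trained network: these weights are
# made up for illustration and stay fixed ("frozen") during fine-tuning.
PRETRAINED_WEIGHTS = [[0.9, -0.2], [0.1, 0.8], [0.5, 0.5]]


def extract_features(x):
    """Frozen feature extractor: its weights are never updated below."""
    return [
        max(0.0, sum(w * xi for w, xi in zip(row, x)))  # ReLU activation
        for row in PRETRAINED_WEIGHTS
    ]


def train_head(examples, lr=0.5, epochs=200):
    """Train only a logistic-regression head on top of the frozen features."""
    head = [0.0] * (len(PRETRAINED_WEIGHTS) + 1)  # one weight per feature + bias
    for _ in range(epochs):
        for x, label in examples:
            feats = extract_features(x) + [1.0]
            z = sum(h * f for h, f in zip(head, feats))
            p = 1 / (1 + math.exp(-z))
            # Log-loss gradient with respect to the head weights only.
            head = [h - lr * (p - label) * f for h, f in zip(head, feats)]
    return head


def predict(head, x):
    """Classify x as 1 or 0 using the frozen features and the trained head."""
    feats = extract_features(x) + [1.0]
    return 1 if sum(h * f for h, f in zip(head, feats)) > 0 else 0
```

Only the four head parameters are learned, which is why a handful of labeled examples can be enough when the frozen features already separate the classes.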

5. Data Governance and Ethical Frameworks:

Establishing robust data governance policies and ethical frameworks is crucial to address concerns about data privacy, security, and bias. This involves developing clear guidelines for data collection, usage, and storage, as well as implementing mechanisms for monitoring and mitigating bias in generated outputs. Transparency and accountability are essential to ensure responsible development and deployment of generative AI systems.

6. Active Learning and Human-in-the-Loop Approaches:

Active learning involves strategically selecting the most informative data points for annotation, reducing the overall annotation cost. Human-in-the-loop approaches involve incorporating human feedback during the training process to guide the model and correct errors. These techniques improve the efficiency and accuracy of the training process, leading to better-performing models.
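
Uncertainty sampling is one common active-learning strategy: send annotators the examples the current model is least sure about. The sketch below ranks an unlabeled pool by the entropy of the model's predicted class probabilities; `predict_proba` is a hypothetical callable standing in for whatever model is being trained.

```python
import math


def entropy(probs):
    """Shannon entropy of a predicted class distribution (higher = less certain)."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_annotation(pool, predict_proba, budget):
    """Uncertainty sampling: return the `budget` items the model is least sure about.

    `predict_proba` maps an item to a list of class probabilities.
    """
    ranked = sorted(pool, key=lambda item: entropy(predict_proba(item)), reverse=True)
    return ranked[:budget]
```

In a human-in-the-loop cycle, people label the selected items, those labels are added to the training set, the model is retrained, and the selection repeats.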

7. Data Quality Assurance and Validation:

Implementing rigorous data quality assurance and validation procedures is critical to ensure that the training data is clean, accurate, and representative. This involves developing automated data cleaning tools and establishing systematic processes for verifying the quality of the data before training.
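
An automated check of this kind can be as simple as declaring rules for each field and rejecting records that break them. In the sketch below, the schema layout (field name mapped to an expected type and a predicate) is an illustrative assumption rather than any particular library's format.

```python
def validate(records, schema):
    """Split records into valid ones and a list of (index, problem) errors.

    `schema` maps a field name to (expected_type, check), where `check` is a
    predicate on the value.
    """
    valid, errors = [], []
    for i, record in enumerate(records):
        problems = []
        for field, (expected_type, check) in schema.items():
            if field not in record:
                problems.append(f"missing field '{field}'")
            elif not isinstance(record[field], expected_type):
                problems.append(f"'{field}' should be {expected_type.__name__}")
            elif not check(record[field]):
                problems.append(f"'{field}' failed check: {record[field]!r}")
        if problems:
            errors.extend((i, p) for p in problems)
        else:
            valid.append(record)
    return valid, errors
```

Keeping the error list, rather than silently dropping bad rows, makes it possible to trace systematic problems back to a particular data source.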

Conclusion: Navigating the Data Landscape of Generative AI

The data challenges faced by generative AI are significant but not insurmountable. By combining better data acquisition, preprocessing, and training techniques with a strong focus on data governance and ethics, we can realize more of generative AI's potential while mitigating its risks. Getting there requires a collaborative effort from researchers, developers, policymakers, and the broader community, along with ongoing research to refine these strategies and develop new approaches to managing and using data. How effectively and ethically we navigate this complex data landscape will largely determine the future trajectory and societal impact of this transformative technology.
